CSSS 2009 Santa Fe-Projects & Working Groups

From Santa Fe Institute Events Wiki


Project Groups

Foraging on the move

Allison Shaw: I've moved the discussion of this idea to a separate project page -- see (Foraging on the move) for more detail and feel free to join in!

Problem solving and mating - are they similar?

The discussions on this project have been moved to a separate page: Problem solving

Interacting distribution networks

Moved to its own page: Interacting distribution networks

The Effect of Gossip on Social Networks

Moved to a separate page: Modeling gossip networks

Radicalization of Islamic Diasporas and Reactive Control Theoretical Approach

See Radicalization

From Topology to Response

Spiking Networks on the Cusp of Chaos

Please click the title to be transported to the project page.

Modeling behaviors between students and teachers

For updates and details about this project, see its page: Modeling behaviors between students and teachers

Determining spatial contact networks for pathogen transmission

Project Page: Spatial contact networks

Human searching strategies in a heterogeneous environment

Project page: Human searching strategies in a heterogeneous environment

Brainstorming

Disease ecology of media hype

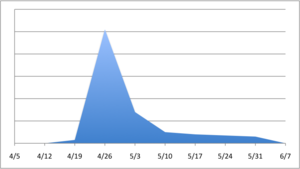

How much an event gets covered in the news often appears to depend on how much it is already covered in the news. Often this distorts reality. For example, the number of searches for "swine flu" (a proxy for media hype) does not reflect the pattern of disease spread over the same period.

While the number of flu cases increased, the searches died off, as interest in the topic waned. It would be interesting to follow the origin, spread and extinction of media hype, maybe applying models commonly used to study the spread of disease. Alexander Mikheyev

You could look at the dynamics from an agent-based modeling (ABM) perspective. There is a recent paper by Epstein and colleagues that focuses on the impact of fear on disease from an agent-based perspective, but it does not capture these dynamics. However, my collaborator and I are currently writing a paper on the same problem you just outlined, from a mathematical epidemiology perspective. Our results show some interesting dynamics, and I think an ABM extension might provide richer dynamics still. Another relevant paper: S. Funk, E. Gilad, C. Watkins and V.A.A. Jansen (2009), "The spread of awareness and its impact on epidemic outbreaks", PNAS Early Edition. Alhaji Cherif
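To make the disease analogy concrete, here is a minimal sketch that treats attention to a news story as an SIR-style epidemic. It is only an illustration of the idea, not the model from either paper mentioned above: the function name `simulate_hype` and the rates `beta` (how quickly interest spreads) and `gamma` (how quickly people lose interest) are made-up assumptions.

```python
def simulate_hype(beta=0.6, gamma=0.2, i0=0.001, days=120, dt=0.1):
    """Integrate an SIR model of attention with forward Euler steps.

    s: fraction not yet interested, i: fraction currently searching,
    r: fraction that has lost interest. beta and gamma are hypothetical rates.
    Returns the interested fraction at the end of each day.
    """
    s, i, r = 1.0 - i0, i0, 0.0
    steps_per_day = int(round(1 / dt))
    daily_interest = [i]
    for _ in range(days):
        for _ in range(steps_per_day):
            new_interest = beta * s * i * dt   # "infection" by existing hype
            lost_interest = gamma * i * dt     # "recovery": interest wanes
            s -= new_interest
            i += new_interest - lost_interest
            r += lost_interest
        daily_interest.append(i)
    return daily_interest


interest = simulate_hype()
peak_day = max(range(len(interest)), key=interest.__getitem__)
print(f"interest peaks on day {peak_day}, then decays to {interest[-1]:.6f}")
```

In this toy version the searches die off purely because the pool of not-yet-bored people runs out, regardless of what the actual flu case counts are doing, which is the mismatch described above.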

Complex networks of acupuncture points around the body

The aim of this project is to build a complex network from the correlations among the 720 acupuncture points and do some interesting research on it. Importantly, as far as I know, this kind of work has not been done before. Feel free to start discussions here to spark some good ideas. You can search for "acupuncture" on Wikipedia for general background; part of the entry is reproduced below.

Acupuncture is a technique of inserting and manipulating fine filiform needles into specific points on the body to relieve pain or for therapeutic purposes. The word acupuncture comes from the Latin acus, "needle", and pungere, "to prick". In Standard Mandarin the term is 針砭 (zhēn biān); a related word, 針灸 (zhēn jiǔ), refers to acupuncture together with moxibustion.

According to traditional Chinese medical theory, acupuncture points are situated on meridians along which qi, the vital energy, flows. There is no known anatomical or histological basis for the existence of acupuncture points or meridians. Modern acupuncture texts present them as ideas that are useful in clinical practice. According to the NIH consensus statement on acupuncture, these traditional Chinese medical concepts "are difficult to reconcile with contemporary biomedical information but continue to play an important role in the evaluation of patients and the formulation of treatment in acupuncture."

The earliest written record that is available about acupuncture is Huangdi Neijing (黄帝内经 or Yellow Emperor's Inner Canon), which suggests acupuncture originated in China and would explain why it is most commonly associated with traditional Chinese medicine (TCM). Different types of acupuncture (Classical Chinese, Japanese, Tibetan, Vietnamese and Korean acupuncture) are practiced and taught throughout the world. Guimei Zhu

Acupuncture/Chinese Alternative Medicine

Here are some more papers regarding research that has been done on acupuncture. Some network analysis has been done. Very interesting stuff!

Acupuncture Overview: Here is an overview of acupuncture from a journal entitled "Alternative Therapies" in 1998.

Acupuncture and Graph Theory: This paper, published in "Progress in Natural Science" in 2009, uses graph theory to build a model of the effects of acupuncture on brain function.

Body-Wide Cellular Network of Fibroblast Cells: A paper relating the study of a body-wide network of fibroblasts to acupuncture. Written in "Histochemistry and Cell Biology" in 2004.

Acupuncture-Psychosocial Context: Another paper, which studies the effects of the procedure. Written in "Advanced Access Publication" in 2008.

Enjoy! Karen Simpson

Housing prices

The New York Times has a set of dramatic graphs showing the rise and fall of home prices in select cities. Again these graphs reminded me a bit of those produced by susceptible-infected-recovered models of disease spread. Maybe there is something to it? Or maybe this phenomenon is already well understood by economists? Alexander Mikheyev

Movie Turnouts

Which would be the more popular movie -- a combination of Steven Spielberg, Eddie Murphy and Gwyneth Paltrow, or Woody Allen, Dwayne 'The Rock' Johnson, and Tom Cruise? Using the adaptation and turnout models presented by Nathan Collins, could we construct a prediction for gross movie receipts or even movie ratings? Nathan Hodas

Climate network model

Requires someone with climatology knowledge. Lenton et al. recently published a paper listing 'policy-relevant' 'tipping elements' in the Earth's climate system and the temperature tipping points required to initiate them. (Basically, the tipping elements are components of the climate system where a bifurcation leading to a different stable state can be induced. The tipping point is the temperature at the bifurcation.) Surely, many of these tipping elements would have feedback effects on other tipping elements or the climate system as a whole. I would like to make a network model of these tipping elements and look at the tipping (or other) dynamics of the whole system. But Lenton et al. don't discuss these feedbacks much in their model, so we need some expert knowledge. Steven Lade

Almut Brunner: Sounds like a very challenging project. As climate modelling is a very broad issue in itself, I would suggest looking at a specific example of threshold values in climate models, e.g. changes in rainfall patterns in the Saharan environment and their impact on vegetation cover and, finally, desertification. It is known, for example, that if the rainfall amount in the Sahara drops below a critical value of 100mm/yr, the vegetation cover will change drastically due to reduced water availability and hence cause irreversible environmental changes. But I am not sure we could model that, given the complex feedback mechanisms and limited access to data. Another idea could be to simulate the other extreme: increased rainfall. Is there a critical threshold value/tipping point causing extreme floods and environmental hazards in exposed, vulnerable landscapes (e.g. lowlands, coastal regions or even around here in the Grand Canyon region, for which we can certainly get some nice data)? Looking forward to discussing these issues a bit more with you. What kind of model did you have in mind for simulating tipping points and feedback mechanisms?

Steven Lade: I wasn't thinking of modelling any of the physics or ecology directly, but at a coarse level with something like

node_i(time) = f_i[global temperature(time - delay_i), outputs of other nodes(time - delay_i)]

global temperature(time) = IPCC[time] + g[outputs of nodes(time)]

Each of the nodes would be a local tipping element. Lenton et al. already provide the global average temperature thresholds for the tipping elements and the time delay for the element to actually tip. We can then specify the part of the function f_i[global temperature] with something like a sigmoidal function. For the base time course of global temperature we could use IPCC projections or hold it fixed and just see what the feedbacks do to it. What Lenton et al. do not specify in detail are these feedbacks -- i.e. the dependence of nodes and the global temperature on the other nodes. Someone suggested to me that for a more abstract study we could look at the behaviour of the system over a range of possible feedbacks.
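As a starting point for discussion, the coarse update rule above could be sketched roughly as follows. Everything numeric here is a placeholder: the thresholds, delays, coupling weights and warming contributions are invented for illustration and are not the values from Lenton et al., and `base_temp` merely stands in for an IPCC projection. The linear sum used for g[...] and the sigmoid width are also assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def simulate_tipping(thresholds, delays, coupling, warming, base_temp, width=0.2):
    """Iterate node_i(t) = f_i[T(t - delay_i), nodes(t - delay_i)] step by step.

    thresholds[i]:  temperature at which element i tips (hypothetical values)
    delays[i]:      lag, in time steps, before element i responds
    coupling[i][j]: extra effective warming felt by i once j has tipped
    warming[i]:     contribution of element i to global temperature (the g term)
    base_temp[t]:   externally imposed temperature path (stand-in for IPCC[time])
    """
    n = len(thresholds)
    states = [[0.0] * n]   # states[t][i] in [0, 1]; 1 means fully tipped
    temps = [base_temp[0]]
    for t in range(1, len(base_temp)):
        current = []
        for i in range(n):
            # A lag of at least one step keeps the update explicit.
            lag = max(t - max(delays[i], 1), 0)
            forcing = temps[lag] + sum(
                coupling[i][j] * states[lag][j] for j in range(n) if j != i
            )
            current.append(sigmoid((forcing - thresholds[i]) / width))
        states.append(current)
        temps.append(base_temp[t] + sum(w * s for w, s in zip(warming, current)))
    return temps, states


base = [0.02 * year for year in range(200)]  # made-up steady warming
temps, states = simulate_tipping(
    thresholds=[2.0, 3.0, 3.5],
    delays=[10, 30, 50],
    coupling=[[0, 0, 0], [0.5, 0, 0], [0, 0.5, 0]],
    warming=[0.3, 0.2, 0.1],
    base_temp=base,
)
print(f"final temperature {temps[-1]:.2f} vs. baseline {base[-1]:.2f}")
```

Sweeping the `coupling` matrix over a range of values would be one way to do the "more abstract study" over possible feedbacks.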

Synchronised magma oscillations

Requires someone with geological knowledge. In a recent paper, which was also reported by New Scientist, Mjelde and Faleide report on seismological measurements that allowed them to infer past rates of magma flow in the plume generally thought to rise beneath Iceland. When the plume is strong it thickens the Earth's crust at this point. They found the crust thickened approximately every 15 million years, and inferred that the magma plume must also have pulsed with this period. These pulsations have also been observed in the crust under Hawaii, with almost exactly the same period! Mjelde and Faleide hypothesise that there must be some giant heating oscillation in the Earth's core which drives these two oscillations in very different parts of the Earth. But other geologists are skeptical because of the huge energy required and the lack of other evidence of such oscillations. All this reminds me of the synchronisation phenomenon, where coupled oscillators, even if only weakly coupled, tend to synchronise. So the oscillations under Hawaii and Iceland may be generated independently, but have some weak coupling that has led them to synchronise. We can make coupled oscillator models, that's easy; what we need more is someone to provide context on possible forms of coupling and their parameterisation. Only about three periods of this oscillation have been observed and the data are quite imprecise, so we can't do much direct data analysis, unfortunately. Steven Lade
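For reference, the "easy" part might look like the sketch below: two Kuramoto-style phase oscillators with slightly different natural periods and a weak sinusoidal coupling. The 15 and 16 million year periods and the coupling strength are illustrative assumptions only, and the sinusoidal coupling is a generic choice, not a claim about any geological mechanism.

```python
import math


def phase_difference(omega1, omega2, coupling, t_end=1000.0, dt=0.01):
    """Euler-integrate two coupled phase oscillators; return the final phase gap."""
    theta1, theta2 = 0.0, 1.0
    for _ in range(int(t_end / dt)):
        d1 = omega1 + coupling * math.sin(theta2 - theta1)
        d2 = omega2 + coupling * math.sin(theta1 - theta2)
        theta1 += d1 * dt
        theta2 += d2 * dt
    return theta2 - theta1


# Time unit: million years. Natural periods of 15 and 16 Myr (hypothetical).
w_iceland, w_hawaii = 2 * math.pi / 15, 2 * math.pi / 16
print("uncoupled phase gap:", phase_difference(w_iceland, w_hawaii, coupling=0.0))
print("weakly coupled phase gap:", phase_difference(w_iceland, w_hawaii, coupling=0.02))
```

For this two-oscillator form the phase gap obeys d(gap)/dt = (omega2 - omega1) - 2 * coupling * sin(gap), so the pair locks whenever the frequency mismatch is smaller than twice the coupling. Without coupling the gap just drifts.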

Implementing Synchronization using NetLogo

Since I just learned about NetLogo, I look forward to the tutorial sessions and would like to implement a synchronization scheme for a group of entities. If I find out how fireflies synchronize themselves, then that would be an option. Of course, I'll be surprised if this has not been done before in NetLogo. I'll welcome any help and suggestions. Mahyar Malekpour

Mahyar Malekpour: Update June 16, 2009 - Someone asked if there is an application for this. The answer is yes, categorically: any self-organizing system needs synchronization. However, my interests here are visualization and exploration using agent-based tools. I do not intend to develop a solution to this problem, but rather to build on an existing agent-based model (if there is one) and enhance its capabilities.

Massimo Mastrangeli: as someone said, there is vast literature on synchronization available; you can for example get a taste in Sync by Steven Strogatz (also, check out his talk at TED). I am quite interested in the idea.

Contagion in Networks

Peter Dodds discussed contagion in a simplified network in which all the nodes have a certain threshold for changing. I thought that if the thresholds vary, that could lead to new behaviors at the group level. For instance, people in different cities might have different resistances to inputs. Hence, we might see that an epidemic spreads in one city but not in another. Consider the cities as nodes in a higher-level network. This means that we might see the same patterns at this higher level: different nodes (cities) react differently to external inputs. This also seems to be a more realistic model of the real world. Any comments, suggestions or discussions, even in the order of minutes, are appreciated! Roozbeh Daneshvar

- Perhaps this concept could be related to ecological food webs and the success of invasive species. The "epidemic" would be an introduced species, and the "spreading of the disease" would be how successful the alien species is within that food web. There are plenty of journal articles attempting to study the success of biological invasion, and I think in addition to looking at the food web networks, generating an agent based model would be ideal! It could be related to your idea, Roozbeh, in that the cities represent "habitats", and the "epidemics" represent the introduction of an alien species.

- Karen Simpson: Introducing Agent-Based Modeling: Several concepts (external and internal inputs) have been discussed that are said to contribute to whether or not a species succeeds in its novel environment. These include: how many individuals are in the founding population, the "strength" of any competing organisms (this would be 0 if there are no competitors), the amount of resources available, the ability of the organism to adapt to the new environment, physiological advantages of the new species over native species (e.g. defense mechanisms), and many more. I think we could find properties of ecological food webs, and then introduce a species (or epidemic) into the network and see what happens based on these inputs. Let me know your thoughts.

- Roozbeh Daneshvar: Karen, this sounds interesting to me and I'd like to know more. Shall we have more discussion over it on Tuesday?
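To have something concrete to discuss, here is a minimal sketch of a threshold cascade in which each node has its own threshold, run on two "cities" that share the same contact graph but differ in how resistant their inhabitants are. The random graph, the threshold ranges and the function names are all illustrative assumptions; this is a generic threshold model, not necessarily the exact one presented in the lecture.

```python
import random


def random_graph(n, mean_degree, rng):
    """Erdos-Renyi random graph as a list of neighbour sets."""
    p = mean_degree / (n - 1)
    neighbours = [set() for _ in range(n)]
    for a in range(n):
        for b in range(a + 1, n):
            if rng.random() < p:
                neighbours[a].add(b)
                neighbours[b].add(a)
    return neighbours


def cascade_size(neighbours, thresholds, seeds):
    """Run the cascade to its fixed point; return the adopted fraction.

    A node adopts once the adopted fraction of its neighbours reaches
    that node's own threshold. Adoption is never reversed.
    """
    active = set(seeds)
    changed = True
    while changed:
        changed = False
        for node, nbrs in enumerate(neighbours):
            if node in active or not nbrs:
                continue
            if len(nbrs & active) / len(nbrs) >= thresholds[node]:
                active.add(node)
                changed = True
    return len(active) / len(neighbours)


rng = random.Random(1)
city = random_graph(200, 4, rng)
seeds = rng.sample(range(200), 5)
receptive = [rng.uniform(0.05, 0.25) for _ in city]   # low resistance (made up)
resistant = [rng.uniform(0.30, 0.60) for _ in city]   # high resistance (made up)
print("receptive city:", cascade_size(city, receptive, seeds))
print("resistant city:", cascade_size(city, resistant, seeds))
```

The higher-level network of cities could reuse `cascade_size` unchanged, with each city's threshold summarising how its own internal cascade responds to outside input.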

Linking topology to dynamic response in small networks

Imagine a small (3-7 nodes) network where every node represents a protein species, and every (directed) edge the activation relation between the proteins (i.e. A ---> B means that the protein A can react with B and activate it). Furthermore, assume that there are two numbers associated with every node: the total number of protein molecules of the given type and the fraction of the active forms. Finally, let two nodes, R and E, be special and call them the Receptor and the Effector. What you have is a crude model of intracellular signalling.

This paper considers such models and exhaustively classifies all the possible topologies (i.e. wirings) with respect to the activation pattern of the Effector in response to a standardized signal sent by the Receptor. The goal of our project would be to do the same experiment using different tools, and potentially obtain different results. The main difference would be to use stochastic (rather than deterministic) dynamics to determine the response. As the signalling systems operate with relatively low numbers of molecules, stochastic effects may be important. If we do this and have time left, we can try pushing it further and consider the issues of robustness and evolvability of these networks.
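To illustrate what "stochastic rather than deterministic dynamics" means here, the sketch below runs a Gillespie-style stochastic simulation of the simplest possible wiring, R ---> E, where active receptor molecules activate effector molecules and active effectors deactivate spontaneously. The reaction scheme and the rate constants `k_act` and `k_deact` are assumptions made for illustration and are not taken from the paper.

```python
import random


def gillespie_activation(total_e, active_r, k_act, k_deact, t_end, rng):
    """Stochastic simulation of E being activated by R and deactivating on its own.

    Returns a list of (time, number of active E molecules), one entry per reaction.
    """
    t, active_e = 0.0, 0
    trajectory = [(t, active_e)]
    while True:
        rate_on = k_act * active_r * (total_e - active_e)
        rate_off = k_deact * active_e
        total_rate = rate_on + rate_off
        if total_rate == 0.0:
            break                              # nothing can happen any more
        t += rng.expovariate(total_rate)       # waiting time to the next reaction
        if t > t_end:
            break
        if rng.random() * total_rate < rate_on:
            active_e += 1                      # one E molecule is activated
        else:
            active_e -= 1                      # one E molecule is deactivated
        trajectory.append((t, active_e))
    return trajectory


rng = random.Random(42)
trajectory = gillespie_activation(
    total_e=50, active_r=10, k_act=0.1, k_deact=1.0, t_end=50.0, rng=rng
)
print("active effector molecules at the end:", trajectory[-1][1])
```

With only tens of molecules the active count fluctuates visibly around its mean, which is the kind of effect a deterministic rate equation would average away.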

To put a nasty spin on the project, I propose that we use an obscure computational technique called model checking to get the response profile of a network; partly just because we can, but partly also because it neatly does away with the need to explicitly simulate and average stochastic models.

Now, a couple of final remarks:

- Don't think of it as a network project. All networks involved will be rather trivial.

- The project group should include a biologist (to do sanity checks) and somebody familiar with parallel computing.

- Model checking is (very) expensive computationally, we will probably need a cluster.

- I have all the original results from the paper mentioned.

- The tool to use would probably be PRISM.

- Marek, this dovetails nicely with my interests & I'd like to talk more about it with you. I have experience with -- and access to! -- a parallel cluster. No experience with PRISM, however. Rosemary Braun

- OK then, I am going to start a project page Marek

If you have not done so yet, I suggest you have a look at "Small Worlds" by Duncan Watts. It contains useful information, models and mathematics on the topic. -Massimo Mastrangeli

Pattern Generation in Dynamic Networks: Elucidating Structure-to-Behavior Relationships

Many sorts of networks produce patterns when dynamics are active on them. The brain is a great example. In fact, the patterns generated in your head are not only interesting and perhaps beautiful, but crucial to your success in surviving and thriving in the world. Gene or protein networks are another example. Change a few genes around and suddenly you're stuck with a nasty disease.

One question we can ask is: how do the patterns of behavior (or "function" if you want to presume as much) change when we change the structural connections in the dynamic network from which they emerge? Alternatively, for a given type of behavior (set of similar patterns), is there a class of networks which all exhibit this behavior? What is common between all of those networks? What is the underlying mechanistic explanation for how they all behave this way?

Some potential topics:

- Genetics - what patterns of proteins emerge depending on what genes are where on a genome? (maybe other questions ... I'm not a geneticist!)

- Spiking neural networks - I have a lot of experience with this.

- Kauffman-like Boolean networks

- Population biology / food webs?

- Economics?

We might even think of embedding this in some physical space. Perhaps neural nets drive the 'muscle' movements of creatures (a la the Karl Sims 'Creatures' video we saw in Olaf Sporns' lecture) or the motors of vehicles.

I have experience in Python, Java, Matlab and a few other languages and am open to working with whatever (NetLogo?). I also have experience with Information Theory, which could come in handy in digesting and analyzing the patterns.

Clearly this project could go multiple directions. Feel free to add ideas/comments here...

- Jacopo Tagliabue: Premise: I don't know if this makes sense at all, or even if it fits the project. I was thinking that it is not just the fact that some areas are connected that makes a difference, but also the way they are connected. For example, the synchronization of neurons plays a pivotal role in the proper behaviour of the brain: when some disease (such as multiple sclerosis) leads to demyelination, the signals in the axons can no longer be processed at the right speed. The upshot is progressive cognitive and physical disability. Can we use agent-based models and/or network analysis to better understand what happens (and why, for example, multiple sclerosis may evolve in four different ways)? If someone with some neuroscience background would like to talk about this (or just explain why this doesn't make sense at all), I'd be glad to learn!

Karen Simpson: This is interesting to me, especially in the case of food webs, merely because that is what I am most familiar with. Within an ecological community, there are certain links that depict the dynamics within that community. If we remove a link (or change it somehow, maybe by redirecting it through another organism), the community is stressed. The community may be resilient and the underlying dynamics may shift back to equilibrium. On the other hand, it may lead to the extinction of certain organisms. One way that these links are changed is by introducing another node into the system, this node representing an introduced species. The success of this species depends largely on its position in the food web and its connecting links. My question (from an ecological perspective) is: Does introducing a non-native species result in different underlying dynamics and patterns? My intuition says yes, but it largely depends on the ability of the non-native organism to succeed in its new environment. (See my thoughts under "Contagion in Networks" for more on this topic)

- Massimo Mastrangeli: The topic looks very interesting. I have read a lot on Kauffman's approach and I would like to get my hands dirty with it. The idea in my opinion is to create a network with a plausibly vast and interesting state space, and explore it using some tools. Analysis of the dynamics of the transitions from one steady state to another might be interesting.
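As a possible starting point for the Kauffman-like Boolean networks topic, here is a minimal sketch of a random Boolean network with synchronous updates, plus a helper that follows one trajectory until it falls onto an attractor. The network size, the number of inputs per node and all function names are illustrative assumptions.

```python
import random


def random_boolean_network(n, k, rng):
    """Give each node k random input nodes and a random truth table over them."""
    inputs = [rng.sample(range(n), k) for _ in range(n)]
    tables = [[rng.randint(0, 1) for _ in range(2 ** k)] for _ in range(n)]
    return inputs, tables


def step(state, inputs, tables):
    """Synchronously update every node from the current state (a tuple of 0/1)."""
    new_state = []
    for node_inputs, table in zip(inputs, tables):
        index = 0
        for source in node_inputs:
            index = (index << 1) | state[source]   # inputs form a binary index
        new_state.append(table[index])
    return tuple(new_state)


def find_attractor(state, inputs, tables):
    """Iterate until a state repeats; return (transient length, cycle length)."""
    seen = {}
    t = 0
    while state not in seen:
        seen[state] = t
        state = step(state, inputs, tables)
        t += 1
    return seen[state], t - seen[state]


rng = random.Random(0)
inputs, tables = random_boolean_network(n=8, k=2, rng=rng)
start = tuple(rng.randint(0, 1) for _ in range(8))
transient, cycle = find_attractor(start, inputs, tables)
print(f"transient of {transient} steps, then a cycle of length {cycle}")
```

Rewiring a single entry of `inputs` and re-running `find_attractor` from the same start state is one cheap way to probe how structure changes the resulting pattern.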

All sorts of (mostly US-centric) data

For fun, brainstorming, and sanity-checking: data.gov has tons of data collected by the US Gov't.

Modularity in complex systems - why is it there and what does it do?

Evolving systems often switch from being highly modular to highly integrated, and vice versa. Why is this so and how does it happen? Wendy Ham and Roozbeh Daneshvar.

- Roozbeh Daneshvar: Today in a slide of Olaf Sporns' presentation, I noticed a graph showing the relation between order/disorder and complexity. When the system becomes too ordered or too disordered, complexity is reduced in both cases. Somewhere in between is where we have the greatest complexity. I was thinking that the emergence of modules is also a movement towards orderliness. But complex systems do not go beyond a limit and still keep some non-modularity. So, Wendy, we have contrasting views on modularity. But maybe we will meet somewhere in between, where we have the greatest complexity!

- Question: Why has modularity changed in human societies? Did the behavior of complexity change?

- Steven Lade: Wendy, can you give some examples of evolving systems moving from "highly modular to highly integrated"? Also, Roozbeh, I don't understand what you mean by "behavior of complexity". Maybe we should talk.

- I like this idea. Clearly it needs some more fleshing out, but it's a good direction. One thing to think about when you see modularity biologically is whether certain 'modules' can be reused in multiple places. Kolmogorov complexity is something that you might look at... watson

- Wendy Ham: Thanks Watson, I will check out the Kolmogorov reference. To Steve: Roozbeh and I started thinking about this when we discussed how some societies have evolved from having a clear demarcation between the gender roles (e.g., men work and make money, women stay home and take care of kids) to not having this demarcation anymore (i.e., gender equality, etc). So at least with regard to gender roles, these societies have evolved from being modular to being integrated. As a general rule, I tend to believe that modularity is important for allowing innovation and adaptation, which are important in a changing environment, whereas integration is good for efficiency. So, the question here, for example, is whether these societies have reached a certain level of "stability" such that modularity is no longer important. Aside from this example, people have shown that bacteria that live in changing environments tend to be modular, whereas those that live in a stable environment tend to be more integrated. Furthermore, organizations (e.g., business firms) also tend to become more integrated/tightly coupled as they mature.

- Roozbeh Daneshvar: Steven, we have a complexity landscape here that imposes where the system should stay. That is normally somewhere between order and disorder that gives the system the highest capabilities. I also associated order with modularity and disorder with dis-modularity (Wendy Ham seemed to agree with this!). Now the amount (and perhaps form) of modularity has changed. So, my intuition is that the complexity landscape (which determines the future behaviors of the system) is changed. This is what I meant by change in "behavior of complexity". I meant that the dynamics of that complex system is changed and hence, the equilibrium is somewhere that did not use to be equilibrium before this (there were some topics related to this area on Monday June 15 lectures).

- Gustavo Lacerda: Watson, Kolmogorov Complexity is a very general concept. Do you mean "motif discovery"?

- Mauricio Gonzalez-Forero: I would like to hear more about this project. Can we meet sometime?

Evolving nanomachines

Take the evolving motors animation we saw at the end of Olaf Sporns' talk, but instead put nanoscale physics, i.e. overdamped motion with Brownian noise, into the simulation. Perhaps put some basic chemistry in too. Evolve possible designs for nanomotors! What we get may include existing biological molecular motors. Or an even crazier idea: put in the physics of quantum mechanics. Steven Lade but with credits to Lilliana!

- Roozbeh Daneshvar: I am interested in this, although I am curious to know what methods you want to pursue. ABM? By the way, I deeply believe that this is the kind of research which determines the future of robotics!
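On the question of methods, the "overdamped motion with Brownian noise" ingredient could be simulated with something as simple as the Euler-Maruyama sketch below, here for a single particle in one dimension. The harmonic spring force and the values of `gamma` (friction) and `kT` (thermal energy) are placeholder assumptions; an evolved motor design would supply its own force function.

```python
import math
import random


def overdamped_trajectory(force, gamma, kT, x0, dt, steps, rng):
    """Euler-Maruyama integration of gamma * dx/dt = force(x) + thermal noise.

    The noise strength follows from the diffusion constant D = kT / gamma.
    """
    noise_scale = math.sqrt(2.0 * kT * dt / gamma)
    x = x0
    xs = [x]
    for _ in range(steps):
        x += force(x) / gamma * dt + noise_scale * rng.gauss(0.0, 1.0)
        xs.append(x)
    return xs


def spring(x):
    """Harmonic restoring force with unit stiffness (a stand-in potential)."""
    return -1.0 * x


rng = random.Random(7)
xs = overdamped_trajectory(spring, gamma=1.0, kT=1.0, x0=0.0, dt=0.01, steps=20000, rng=rng)
mean_square = sum(x * x for x in xs) / len(xs)
print(f"mean square displacement in the trap: {mean_square:.2f}")
```

There is no inertia term at all: velocity is set instantly by force and noise, which is what makes nanoscale design so different from the macroscopic evolved motors in the animation.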

Credit Market Simulation

Money is loaned every day on the bond and money markets between banks, corporations, and individuals. It usually works very efficiently, but, ultimately, it is driven by humans. An agent simulation could provide us with insight into what behavior patterns give rise to the booms and busts that we have been experiencing. My guess is that it boils down to how individuals estimate risk and future reward. Nathan Collins suggested a learning model for how people get habituated to reward, expecting more and more for satisfaction. However, what happens to our estimates of risk in the face of increasing rewards? When the two are out of sync, we would likely see interesting dynamics. We've come up with a few ideas for how to implement this. Nathan Hodas

- Jacopo Tagliabue: It could be interesting to embed insights on risk-seeking and risk-averse behaviour from prospect theory and behavioural economics. I am also interested in agent-based simulations of a simple economy, where agents may use different heuristics (rational decision theory, Simon's model, Kahneman and Tversky theory, etc) to decide what to do. It is often said that in the market "errors cancel each other out", leaving an optimal or quasi-optimal global outcome: but is it true? And what's the relationship between individual strategies and these dynamics?

- john paul: I'd like to throw my weight in with this one to see how this is addressed. Mr. Hodas and I have been talking about real-world risk associated with credit and defaults as noise in a system, and directed flows of current cash, credit and derivatives as three possible visualizations. Ideally we can pull out some real-world credit data and begin to construct a scale market of one economy (or sector of an economy, like government spending) and then hopefully either scale that up or adjust as needed to other data.

- Wendy Ham: Do you guys consider credit default swaps (CDS) as a special kind of financial instrument - one that almost completely lacks inhibitory mechanisms and thus is able to grow indefinitely? (Analogy to cancer cells?)

Creative Process

This is a very preliminary attempt to analyze the creative process in order to identify how we come up with ideas.

We view the creation of ideas as a process of random combination of concepts and connections taking place in the subconscious. Most of these ideas are filtered before reaching the conscious. Those ideas that rise to consciousness are new to the individual, and some of them may also be new to the world. We generally classify the latter ideas as creative. Furthermore, the creativity literature refers to ideas as creative only when they are immediately useful in solving some problem or condition.

The existing concepts and connections can be considered as nodes or agents. A new idea can be a combination of at least 2 concepts + a connection or two connections, or some superposition of them. The following rules hold at the subconscious level:

1. The random process is taking place all the time with a single combination at one time

2. Each idea (which is a newly created concept or connection) attempts to pass through a filter. It either passes through or it doesn’t. If it does pass through, the idea is recognized and the coupling between the concepts/connections is raised. Each increase is by a factor of 0.1 (starting from 0) of the existing coupling until it reaches a maximum of 1. If it doesn't pass through, it ceases to exist (however, it may reappear later and, given a change in the characteristics of the filter, may then be allowed to pass through).

The rules that define the ideas that pass through are:

1. The database of filters (individual’s understanding of the external environment, self control, etc.) defined in terms of what concept and connection associations are allowed to pass through as well as 20% deviation in them. [Ques: How can the deviation of a concept be evaluated numerically?]

Using complexity theory:

1. Agent based modeling can be used to identify how newer ideas rise to the level of consciousness, how the filters affect them

2. The network analysis can be used to understand how the coupling affects the creation of new ideas (concepts/connections)

Wendy Ham: Hi Murad, there are definitely some overlaps in our interests.

The Biological Evolution and Social Learning of Cooperation

Both evolutionary biologists and social scientists have convincingly shown that cooperation can emerge and persist in human society. Although the two have employed the same methods (game theory and agent-based modeling), they have proposed different mechanisms: on the one hand, biological evolution based on kin selection, group selection, the “green-beard” effect or reciprocity and on the other, socio-cultural adaptation due to social learning. The two mechanisms act on different time scales and make different assumptions on the agents’ behavior (fixed vs adaptive) and the underlying dynamics (reproduction vs imitation). I think it will be interesting to combine the two mechanisms in a single agent-based model and to explore how they relate to each other. Following standard practice, the model will consist of agents on a spatial grid or a(n evolving) network who play a game such as the Prisoner’s Dilemma or Hawk-Dove. Milena Tsvetkova

Nice. Indeed, one can reinterpret things to some extent and understand cultural and biological evolution in similar veins. In both sorts of evolutionary processes, individuals can be assigned fitness. In the biological case fitness refers to ability to leave offspring, while in the cultural case fitness might refer to ability to be imitated by others. So, reproduction can be understood as genetic or cultural. Mainstream evolutionary biologists use these interpretations, but I wonder if they break in some cases. Mauricio Gonzalez-Forero

Mauricio Gonzalez-Forero: Mareen, Varsha and I have sketched a potential agent-based model for the evolution of division of labor. It needs more thought, and the input from social sciences people would be very valuable. The model considers two labors performed by agents and a cooperative trait. Given spatial structure and dispersal restriction, we expect the cooperative trait to allow for the division in labor to evolve. It should be straightforward to implement in NetLogo. After an analysis of the simulations, it would be neat to synthesize the model analytically. Interested people are certainly welcome to help!

Gustavo Lacerda: Mauricio, this sounds interesting.

The Emergence of Meaning and the Evolution of Language

There are several attempts in the philosophical and psychological literature (see Lewis’ work on convention and Grice’s analysis of meaning) to analyze the emergence of meaning. Most accounts (if not all) make extensive use of meta-representations, that is, the ability we have to understand other people's intentions and “read” the content of their mental states. There are two problems with these theories: first, they are developed in a static fashion, while it may well be the case that the emergence of meaning is the result of a continuous, adaptive process; second, they seem to be plainly false, at least if we are willing to say that people affected by autism – and thus unable to read others' minds – understand and produce meaning (see this recent paper by Gluer and Pagin). Brian Skyrms and others used evolutionary game theory to evolve proto-languages, so-called “signaling games”, to understand how meaning dynamically emerges without meta-representations (it turns out that meaning can be understood as a form of equilibrium in these evolutionary dynamics). It could be interesting to further develop these insights, adding more realistic features to AB models:

- adding noise

- explore the same game in different topologies and see if the emergent behaviour depends in some way on constraints on how agents move

- see if it is possible to evolve language with a proto-grammar

These are just some preliminary considerations. Let me know what you think! Jacopo Tagliabue

- Gustavo Lacerda: Jacopo, I'm a fan of Simon Kirby's work.

Biological Pathways

Loosely defined, biological pathways are networks of molecular interactions that achieve a specific biological function. I'm interested in using the information we already have about them in the analysis of microarray data. I have a bunch of half-baked ideas; here are two.

- Steven Lade I'm interested in one or both of these.

- Gustavo Lacerda: Me too! I'm interested in statistics in the "small n, large d" setting, sparse regression, and incorporating structural knowledge through e.g. strong Bayesian priors.

Many hits vs. critical hits

Microarrays assay 10^5-10^6 biological markers per sample. The most basic analysis is to ask whether each marker, individually, is disease-associated; a common multi-marker approach is to sort the markers based on the magnitude of their association with disease, and then ask whether the high-scoring markers are over-represented in some pathways (biological interaction networks). By systematically performing an enrichment analysis on all known pathways, it is possible to elucidate which ones may play a role in disease. (cf. GSEA.)

On the other hand, it is well known that the centrality of a molecule in the biological pathway is strongly correlated with its biological importance -- the lethality of knocking out a gene is related to its centrality (eg Jeong 2001). This finding has been used to study individual markers 'within' a given pathway to predict which ones would be the most biologically relevant (eg by ranking the markers based on centrality; Ozgur 2008).

One of the drawbacks of GSEA-type enrichment approaches is that they do not consider the centrality of each marker, ie, they are pathway-topology-ignorant. To the best of my knowledge, while centrality has been looked at to examine the importance of individual genes to a given function, it has not been incorporated in enrichment analyses. I would like to answer the question "is a pathway more critically hit with disease-associated alterations than would be expected by chance alone" using a centrality-aware scoring function.

One very naive way to do this would be to simply scale the single-marker association statistic used in GSEA by the centrality of the gene in the network. This raises a question of its own, however: to what degree do the results depend on the severity of the scaling?
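To make the naive scaling concrete, here is a minimal sketch. Everything in it is an assumption for illustration: degree centrality stands in for "centrality", the exponent `alpha` is a hypothetical knob for the "severity of the scaling", and the permutation test only shuffles statistics among the pathway's own nodes, which is a much simpler null than the one GSEA uses.

```python
import numpy as np

def degree_centrality(adj):
    """Degree centrality from a symmetric 0/1 adjacency matrix."""
    adj = np.asarray(adj, dtype=float)
    return adj.sum(axis=1) / (adj.shape[0] - 1)

def pathway_score(stats, adj, alpha=1.0):
    """Centrality-weighted mean of |association statistic|.

    alpha = 0 ignores topology (plain mean); larger alpha weights hubs more.
    """
    weights = degree_centrality(adj) ** alpha
    return float(np.sum(weights * np.abs(stats)) / np.sum(weights))

def permutation_pvalue(stats, adj, alpha=1.0, n_perm=2000, seed=0):
    """Shuffle statistics over the pathway's nodes and compare scores.

    Asks whether strong hits sit on central nodes more often than a
    random placement of the same statistics would give.
    """
    rng = np.random.default_rng(seed)
    observed = pathway_score(stats, adj, alpha)
    null = np.array([pathway_score(rng.permutation(stats), adj, alpha)
                     for _ in range(n_perm)])
    return float((1 + np.sum(null >= observed)) / (1 + n_perm))

# Toy star-shaped pathway: node 0 is the hub and carries the strongest hit.
adj = np.zeros((5, 5))
adj[0, 1:] = adj[1:, 0] = 1
stats = np.array([3.0, 0.1, 0.2, 0.1, 0.3])

for alpha in (0.0, 0.5, 1.0, 2.0):
    print(alpha, round(pathway_score(stats, adj, alpha), 3),
          permutation_pvalue(stats, adj, alpha))
```

Sweeping `alpha` as in the loop is one cheap way to see how strongly the ranking of pathways depends on the scaling.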

Anyway, that's one half-baked idea. [Resources available: tons of data; adjacency matrices for pathways represented in KEGG, BioCarta, Reactome, and the NCI/Nature pathway database; useful ancillary functions in R; a cluster for permutation testing/exploring the parameter space.]

Gene expression time-course spectra

Consider all the genes involved in a given pathway. Consider, also, a set of data that gives us the expression values for each gene at a handful of timepoints, eg, before (t=t0) and after (t=tf) an environmental exposure.

Next, suppose we describe the activity of that pathway by a completely connected directed graph, for which the weight of the edge from gene_i to gene_j is given by MI(gene_i(t=t0),gene_j(t=tf)) (in the case of multiple timepoints, we could extend this -- eg transfer entropy). That is, the weight of each directed edge from gene_i to gene_j would tell us how well gene_i at t=t0 predicts gene_j at t=tf.

(I suggest the complete graph, rather than using the known pathway topology, because in practice the time differences tf-t0 may result in multiple "hops" -- so we may have correlations between next-next-neighbors rather than nearest neighbors, etc.)

So, we now have a description of signal propagation through the pathway over the time t0->tf, which we could summarize using the eigenvectors of the Laplacian. If we have two classes, eg cells which do/don't respond to the exposure, will we see statistically significant differences in the spectra for certain pathways, and thus infer that those pathways are involved in the response?
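A rough sketch of the pipeline described above, on synthetic data. The assumptions are mine: mutual information is estimated with a crude 3-bin histogram (badly biased for a handful of samples), the Laplacian is the out-degree Laplacian of the directed graph, and the summary shown is its eigenvalue spectrum, which can be complex because the graph is not symmetric.

```python
import numpy as np

def mutual_information(x, y, bins=3):
    """Plug-in histogram estimate of MI (in nats) between two samples."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)   # marginal of x, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)   # marginal of y, shape (1, bins)
    nz = pxy > 0
    mi = np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz]))
    return max(0.0, float(mi))

def mi_graph(expr_t0, expr_tf, bins=3):
    """W[i, j] = MI(gene_i at t0, gene_j at tf); inputs are (samples, genes)."""
    n_genes = expr_t0.shape[1]
    W = np.zeros((n_genes, n_genes))
    for i in range(n_genes):
        for j in range(n_genes):
            W[i, j] = mutual_information(expr_t0[:, i], expr_tf[:, j], bins)
    return W

def laplacian_spectrum(W):
    """Eigenvalues of the out-degree Laplacian D_out - W, sorted by real part."""
    L = np.diag(W.sum(axis=1)) - W
    eig = np.linalg.eigvals(L)
    return eig[np.argsort(eig.real)]

# Synthetic example: 40 samples, 4 genes; gene 0 at t0 drives gene 1 at tf.
rng = np.random.default_rng(0)
t0 = rng.normal(size=(40, 4))
tf = rng.normal(size=(40, 4))
tf[:, 1] = t0[:, 0] + 0.05 * rng.normal(size=40)

W = mi_graph(t0, tf)
spectrum = laplacian_spectrum(W)
print(np.round(W, 2))
print(np.round(spectrum, 3))
```

Comparing two classes would then mean computing one spectrum per class and testing the difference against spectra from label-permuted samples, which is where the small-sample pitfall bites.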

Possible pitfall: most time-course experiments only have a handful of samples for each timepoint.

Network structure of personality

Sean is interested in using the methods presented by Scott Pauls at SFI on Wednesday to analyze the structure of personality as revealed by personality psychology's canonical test, the NEO PI-R, and its freeware version, the IPIP NEO.

These surveys consist of 240 and 300 questions, respectively, and have been analyzed using traditional factor analysis to reveal the Five Factor Model of personality (FFM, see Wikipedia article). But there is much debate within personality psychology about the exact structure of the factors, and particularly the higher order correlations among them. Traditional factor analysis is not much use in resolving these disputes, but that is just about the only method which has been used so far. I've spoken to Scott Pauls about this already, and he says that his method might be useful to help to resolve the issue (see his comments below).

I've got a dataset of about 1000 responses to the NEO-PI-R and 21,000 responses to the IPIP NEO, and I can get access to a smaller dataset which also includes some info on FMRI imaging and some other personality tests if necessary.

This is not a subject which I was originally planning on pursuing when I came to the CSSS, but I think that the central importance of this test to personality psychology means that the project will have a reasonable chance of getting published regardless of the results, and anyone working on it should learn some cool data analysis techniques along the way.

If you'd like more information about what I've written here, note that I will be talking about the subject over lunch on Tuesday the 16th. Just find my table (or avoid it, depending on your preferences).

I'm in. Marek Kwiatkowski

Murad Mithani: I would like to know more about this.

I'd like to talk more. Casey

Scott Pauls: Some comments on this idea.

I think this is a very interesting application of the PDM (or some variant of it). One of the aspects of the "Five Factor model" is the controversy around the selection of the factors and their putative independence (they are not). The collection of tools we use will allow for a data driven extraction of factors on multiple scales. I suspect, although it is not a given, that the top layer of factors will reflect to some extent the "five factors" already used. However, it will give detailed information on the relationships between the pieces. Moreover, the multi-scale decomposition should yield a very textured description of the personality factors and their interactions.

A couple of technical points - given the length of the data series (200-300 questions), I would probably limit the analysis to roughly 150 respondents at a time. The wealth of data available means that one can do multiple experiments using ~150 members allowing for a good analysis of the robustness of the factor results.

Guimei Zhu: I'm interested in this; I am also curious about people.

Music Rhythm Pattern Generation with Hierarchies and Dynamics (PROGRAMMERS WANTED!)

Western based music comes in boring measures. 4 beats, 16 beats and then repeat plus a little modification. Boring!

Even exotic music from India or Bali sticks to one particular measure ... even if it's some bizarre integer, a prime number say, like 17. But what if we introduce hierarchies of measures?

So let's say a measure is one minute long. Between every beat of your 4-beat measure I introduce 7 beats. And between the first four of those I introduce 2 beats; between the 2nd 5 beats and between the third and fourth 3 beats each. What does that music sound like!?

Clearly there is synchrony every x beats between different patterns but in between there is something which bears some relationship over time but takes a little listening to understand.
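As a starting point for implementers, here is a small sketch of nested measures. The nested-list encoding and the function name `onsets` are hypothetical, and the pattern `[2, 5, 3, 3]` is only one possible reading of the example above. Exact fractions are used so that coincidences between layers can be found by plain set intersection.

```python
from fractions import Fraction

def onsets(subdivisions, start=Fraction(0), length=Fraction(1)):
    """Onset times of a nested rhythm inside one measure.

    `subdivisions` has one entry per beat. An int k splits that beat
    into k equal pulses (1 leaves it whole); a nested list recurses,
    treating the beat as a small measure of its own.
    """
    times = set()
    step = length / len(subdivisions)
    for k, sub in enumerate(subdivisions):
        beat_start = start + k * step
        if isinstance(sub, int):
            times.update(beat_start + i * step / sub for i in range(sub))
        else:
            times |= onsets(sub, beat_start, step)
    return times

# Four beats carrying 2, 5, 3 and 3 pulses respectively.
pattern = onsets([2, 5, 3, 3])
print(sorted(pattern))

# Two flat layers played together: where do they coincide?
four = onsets([1] * 4)
six = onsets([1] * 6)
print(sorted(four & six))

# A deeper hierarchy: the first beat is itself a 3-beat measure whose
# middle beat is split in two.
print(sorted(onsets([[1, 2, 1], 1])))
```

Multiplying each onset by the measure duration gives times in seconds, which could then drive any sample player.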

What music is most pleasing? What do you want to hear more of? What is too complicated/random and what is too boring?

I have worked previously on such a system written in Java called the Emonic Environment. But this was many years ago and I have learned much about much since then.

What can we create now?

A few people have expressed interest, including Murad and Casey, but I need at least one or two other people who are capable of contributing to the implementation before we can go ahead with the project.

Do you find yourself fascinated by your own attraction to different sorts of rhythm? Do you sense that this summer school could be a pathway for reigniting your own passion for creativity and expression, while maintaining some connection to science? Both Liz Bradley and Peter Dodds encouraged us to nurse the flame fueled by playfulness and creation, to keep ourselves engaged by having fun and staying curious. If a group of us got together and really inspired one another with our ideas and passion, maybe we could make something compelling and bring out the curious 5-year-old latent in all of us.

What are interesting ways to create hierarchies and change them dynamically? What sort of dependence should one structural or functional parameter have on others in order to create sequences of sounds that aren't just random but rich in some sense?

- Massimo Mastrangeli: I guess Watson is referring to polyrhythm(ics), which is a way of layering musical compositions with parts each having its own signature/tempo. This is traditional in some African cultures, and is anyway sometimes also used in Western modern music (e.g. Stravinsky's "Rite of Spring"; also, those who know metal bands like Meshuggah, Pain of Salvation and similar can get an immediate idea). Odd time signatures are also quite common in muzak/klezmer tradition (and progressive rock!). They bring an overall impression of dynamism and energy, given that the beat patterns can be richer and more unpredictable than in common 4 beat time signatures. I like this type of music quite a lot (you had doubt still? :) ), I could contribute to the project with my musical experience. It can be a nice occasion also to learn about new tools. The project may have some substantial physiological/esthetic components to it.

- watson: hmmm ... yes. this is exactly what I am talking about. very cool to hear you have some experience with this Massimo. right now i am leaning on going ahead with this project. i think we have serious potential to make impact, elucidate new relationships and phenomena and educate in the process. and i think it could be a ton of fun. here are a couple of links i have found which could be of use:

- this page talks about history, theory and even mentions chaos.

- this is a good source for tabla samples. tabla is one of the simpler devices that has some melodic structure as well as rhythmic structure to it. we could work with others as well... one thought is even just a drum kit of different sounds (rock style).

Rebellion

The results of Iran's recently held presidential election (June 12, 2009) are very controversial. Demonstrations are being held across Iran and some have turned violent, with a few fatalities reported. Demonstrations are also being held in major cities across the world. It is reminiscent of Iran's revolution about 30 years ago. So, here is an idea for an agent-based model of a rebellion: what does it take to tip the balance to successfully influence the election process for a possible re-election? What kind of networks should be used to model the rebellious groups? Or, to take it to the extreme, what does it take to have another revolution? Mahyar Malekpour

David Brooks This seems to be the same problem as the Gossip suggestion from above. Perhaps we could combine the two adding factors such as participation hesitation to represent the stability that must be overcome to induce action (participation in gossip or revolution). Perhaps we could get together with the gossip model team to discuss the potential.

Scott Pauls There are interesting discussions in the political science literature concerning revolutions in relatively authoritarian regimes. T. Kuran has spent most of his career on such models. One of his first papers on this is T. Kuran, Now out of never: The element of surprise in the East European Revolution of 1989, World Politics, vol. 44 (October, 1991), pp. 7-48.

Mesoscopic self-assembly of passive functional components

Self-assembly is being recognized in the field of microelectronics as a viable way to assemble multifunctional systems in a cheap and efficient way. Besides speeding up the assembly procedures that are now standard (e.g. pick-and-place), self-assembly is enabling the construction of unique systems which would otherwise not be possible. This is particularly important and promising for devices whose size ranges from microns to millimeters, i.e. devices which are too large to be assembled by supramolecular assembly and also too small to be assembled by robotic assembly.

This project would aim at designing ensembles of electronic components (i.e. devices endowed with electromechanical interconnecting structures which constrain the possible arrangements) and the constraints on the physical environment that would result in the autonomous formation of standalone and functional systems. It is a type of static self-assembly, where the energy is dissipated only while the system is reaching its thermodynamical minimum energy state. I propose agent-based models which should encode physical forces among components and/or templates (e.g. gravity, capillarity, electromagnetic fields, chemical forces), and should bring about a plausible dynamics and parameter space for successful assemblies.

Guns, Germs and Steel: Modeling the fates of human societies

In his hugely influential book Guns, Germs and Steel J. Diamond tries to answer a question once posed to him by his field assistant: "Why is it that you white people developed so much cargo and brought it to New Guinea, but we black people had little cargo of our own?" The book is a verbal model, which suggests that the fate of a human society is a product of the locally available resources, such as which crops could be domesticated, and the geographic configuration of regions, which then allowed these resources to be transmitted. The book has many intriguing and testable elements. In effect, Diamond describes a network model, where success is determined by connectedness and information transfer. The ideas of GGS can be tested by taking the underlying patterns of resource distribution and feeding them into explicitly specified information transfer networks. You can even permute various parts of the system and see whether you would still get the same historical dynamics. Sasha

Randy Haas Sasha, I have lots of thoughts on this, and it is similar to a problem I've considered posting. I can certainly contribute an anthropological perspective on the problem, and the archaeology of agricultural origins is an area of specialty for me. Let's talk about it.

Alhaji Cherif There is a nice book by Peter Turchin, Historical dynamics, where he studies cliodynamics and has looked at some of these questions using both empirical and mathematical models. He has also written some papers on these subjects, which might be a good starting point.

Nathan Hodas I'd like to be in on this. I've pondered a good deal about this since reading the book. Maybe we should contact Jared Diamond?

Regional language differentiation

The goal of the Dictionary of American Regional English is to capture how colloquial expressions vary across the United States, based on interviews conducted in the mid-20th century. Check out this sample entry. There is also a collection of recordings where >800 people from various regions read the same text. I am not exactly sure what one can do with this resource, but maybe someone can come up with a good idea. Sasha

Deconstructing CSSS09

One fun and easy application of network theory would be to look at ourselves at the end of the course, using an anonymous survey. What was the social interaction network? How frequently was there discussion between disciplines and did that lead to productive final projects? Is there a link between the social and final product networks? In principle, these data can potentially be linked to those collected by SFI at the beginning of the summer school. This could be an interesting way to see how the summer school (and more broadly interdisciplinary interactions) actually works. These data might also be useful for planning the structure/composition of future classes. Sasha

Wendy Ham: I agree Sasha, would love to help out with designing surveys, etc.

Margreth Keiler: Murad and I had the same idea yesterday, but we thought of running a survey each week to see how the network changes over time, and of adding surveys after CSSS as well. Should we discuss our draft tomorrow at SFI?

Biodiversity, evolution, modularity--ideas from Doug Erwin's lecture

Here is a list of ideas mostly inspired by Doug Erwin’s lecture. I haven’t written anything very in depth due to lack of time but I think it would be fun to think about how to model any of these topics. Many of the topics are highly interrelated. I would recommend looking at Doug’s 2007 article on the readings page if interested.

How to model biodiversity. Why would greater biodiversity rise out of extinction?

Does evolution reach a sort of stability point when all the niches are ‘full’ and there is a lot of competition? Does lack of competition (due to extinction or whatever) create the opportunity to diversify more?

Why does biodiversity cluster?

Two models in the paper:

- Genetic or developmental hypothesis: a mutation-driven model of change. Corresponds to ‘supply driven’ innovation in economics.

- Ecospace hypothesis: variations in ecological opportunity control the success of major new morphologies. Corresponds to ‘demand driven’ innovation.

Genetic kernels

How are they developed? Why did they all develop at the same time after extinction? Why did animals develop kernels and not plants?

Modularity. http://en.wikipedia.org/wiki/Modularity_(biology)

Why do biological organisms develop modules? How many components make up one module? Is there a difference in the modularity of ‘higher’ versus ‘lower’ level organisms? (There is well studied modularity in the central nervous systems of long swimming organisms such as leeches or electric eels). Why do invertebrates develop locomotion modules (repeating, identical body part segments hooked together in some way to allow motion) but vertebrates do not (they only have 2 or 4 legs)?

Economic Geography in the Lake Titicaca Basin

See summary in the final projects section below. Group working page: Economic Geography and State Emergence

“Let it rain” - Simulating flood events by Agent-Based Modeling and GIS

How much rain is required to flood the Grand Canyon?

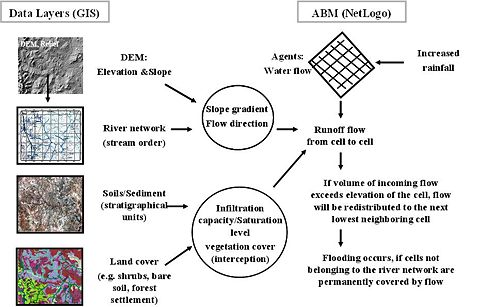

The idea is to build an Agent-Based Model to simulate the impact of increased rainfall on flow dynamics of a specific river network of the Grand Canyon region. The agent for the ABM is the water flow (=runoff) moving from cell to cell, dependent upon topography (=slope/gradients of the neighboring cells). The flow dynamics are therefore directly related to the Digital Elevation Model (DEM) of the region and indirectly to environmental parameters such as soil/substrate (e.g. stratigraphical units) and land cover/use (e.g. bare soil, shrubs, forest, settlement). The latter parameters could be integrated into the ABM by assuming a possible range of values influencing flow dynamics in relation to e.g. infiltration (if the soil is saturated, runoff occurs) and vegetation cover (high vegetation cover leads to high interception, less runoff). The different data layers can be integrated into the ABM by GIS (Geographical Information Systems).

What is the relationship between rainfall pattern and runoff/ flooding?

What effects do topographical/environmental parameters (e.g. slope gradients, substrate, vegetation cover) have on runoff/flooding?

Are there non-linearities related to the dynamical flow network?

What are possible feedback mechanisms? (e.g. positive feedback mechanism: increased rainfall → increased runoff → erosion and hence deepening of channels → steeper slope gradients → increased runoff)
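To make the cell-to-cell runoff idea concrete, here is a toy sketch of steepest-descent routing on a small elevation grid. It is an assumption-laden simplification: uniform rain, a single hypothetical `infiltration` threshold standing in for soil and vegetation, all of a cell's water sent to its steepest downhill neighbour (8-neighbourhood), and no erosion feedback. The 3x3 DEM is invented for illustration.

```python
def flow_accumulation(dem, rain, infiltration=0.0):
    """Route runoff downhill over a DEM given as a list of rows.

    Each cell produces max(0, rain - infiltration) units of runoff and
    passes everything it holds to its steepest downhill neighbour.
    Returns the total water that passed through each cell.
    """
    rows, cols = len(dem), len(dem[0])
    runoff = max(0.0, rain - infiltration)
    water = [[runoff] * cols for _ in range(rows)]
    # Visit cells from highest to lowest so upstream water arrives first.
    cells = sorted(((dem[r][c], r, c) for r in range(rows) for c in range(cols)),
                   reverse=True)
    for z, r, c in cells:
        best_slope, target = 0.0, None
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr or dc) and 0 <= nr < rows and 0 <= nc < cols:
                    dist = (dr * dr + dc * dc) ** 0.5
                    slope = (z - dem[nr][nc]) / dist
                    if slope > best_slope:
                        best_slope, target = slope, (nr, nc)
        if target is not None:      # cells with no lower neighbour are sinks
            water[target[0]][target[1]] += water[r][c]
    return water

# Invented DEM draining towards the bottom-right corner.
dem = [[9, 8, 7],
       [8, 6, 5],
       [7, 5, 1]]

for row in flow_accumulation(dem, rain=1.0):
    print(row)
```

Real data would replace `dem` with a GIS raster, and per-cell infiltration and interception layers would replace the single threshold.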

Looking forward to exchanging ideas!

Steven Lade Hi Almut, As I've said, I think this is well suited to modelling with differential equations. Particularly if, as I assume to be the case, the GIS data comes already in a rectangular grid. Having said that, there are some complementary aspects for which ABM would be well-suited. For instance, following agents as they form streams, or if you were to have a localised thunderstorm. We could possibly do this in parallel and see if they match and/or use each method's particular advantages.

You may be interested in this paper, which I found through the SFI library database: [1]. I think this one is more complicated though, because they need to consider a three-dimensional water table. More generally, what sort of modelling (if any) do people usually do in these sorts of topics?

Karen Simpson I am interested in this project! I have studied these concepts in many of my classes. Through past research, I've looked at storm/rain events, and how a large runoff from stormwater causes high contaminant concentrations in streams and rivers. This research was done for urban, forest, and agricultural landuse types. (I will try to find the results of this research soon). Another thing to think about is the time between rain events. A long timespan between rainfall events will cause the soil to become unsaturated, and the next rainfall may have little effect on the stream. I also will not be around much this weekend, so would it be possible to meet sometime tomorrow (Thursday 6/18)?

Scalable (parallel) Spatial Agent-Based Models

This project idea is an exploration of what happens to agent-based models “in the large?” For example,

- As the number of interacting agents in a model increases, what happens to the dynamics of the model?

- What happens as the size of the agents’ domain increases (e.g. simulating a neighborhood versus simulating a city or country)?

- How do the properties of the model change? Are there scaling laws in effect?

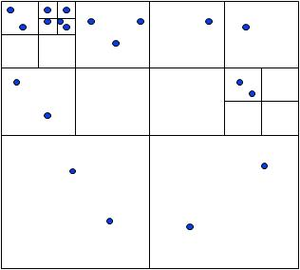

In order to investigate these issues, we need a scalable simulation, i.e. a parallel implementation of the model that allows us to introduce arbitrarily large numbers of agents. There are many approaches to doing this [lit review needed here!], but for this project, I would like to focus on spatial agent-based models: models where there are N agents who exist in a geographical domain and possess “vision,” where vision can be optical/eye-based or local communications (audible or electromagnetic line of sight). A couple such models which can serve as starting points include the flocking model (aka “boids”) and Epstein’s model of civil violence (or its derivative “Rebellion” model).

The idea is to decompose the spatial domain into independent subdomains, distribute those subdomains to nodes on a compute cluster, amalgamate the results, wash-rinse-repeat. One possible approach is to use an adaptive mesh refinement (AMR) such as those used by engineers for finite element analysis or by physicists in hydrodynamics simulations. One concrete example, using a quad-tree decomposition to keep agent density constant on each processor (and thereby keeping computational load balanced), is as follows:
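A minimal serial sketch of the quad-tree idea, with no MPI involved. The function name, the `max_agents` threshold and the synthetic agent positions are assumptions for illustration. Each leaf is a candidate subdomain, and capping the number of agents per leaf is what keeps the load roughly balanced.

```python
import random

def quadtree(points, x0, y0, size, max_agents, depth=0, max_depth=12):
    """Split a square domain until every leaf holds at most max_agents.

    Returns a list of leaves (x0, y0, size, points). Cells are
    half-open, [x0, x0 + size) by [y0, y0 + size), so each agent lands
    in exactly one leaf.
    """
    if len(points) <= max_agents or depth >= max_depth:
        return [(x0, y0, size, points)]
    half = size / 2
    leaves = []
    for dx in (0, 1):
        for dy in (0, 1):
            cx, cy = x0 + dx * half, y0 + dy * half
            inside = [(x, y) for x, y in points
                      if cx <= x < cx + half and cy <= y < cy + half]
            leaves += quadtree(inside, cx, cy, half, max_agents,
                               depth + 1, max_depth)
    return leaves

# 400 agents spread over the unit square plus 400 crowded into one corner.
rng = random.Random(1)
agents = [(rng.random(), rng.random()) for _ in range(400)]
agents += [(0.1 * rng.random(), 0.1 * rng.random()) for _ in range(400)]

leaves = quadtree(agents, 0.0, 0.0, 1.0, max_agents=64)
print(len(leaves), "subdomains")
print("smallest cell:", min(size for _, _, size, _ in leaves))
print("largest load:", max(len(pts) for _, _, _, pts in leaves))
```

What this leaves out is the hard part for a spatial ABM: agents near a cell boundary can see into neighbouring cells, so each subdomain would also need a halo of copies of nearby agents, and the tree has to be rebuilt or adjusted as agents move.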

I have a cluster available for implementation, along with the MPI libraries for parallel programming. Other suggested areas of expertise that would greatly benefit the project include:

- Someone interested in evaluating simulation results, who can help ensure that we don’t break the model by decomposing it.

- Someone interested in analysis, for exploring the effects of scaling on the model.

- Someone interested in high-performance computing, to help with programming (probably C/C++ with MPI).

From talking to folks in our class, some other benefits of the approach include

- improving running time for very-long-running simulations

- aerospace applications—decomposing the National Air Space into computationally tractable subdomains for modeling or real-world purposes.

- Applying the decomposition technique to other model domains. For example, can a similar technique be used to decompose a social network, especially if a single model has both geographic spatial domains and also network domains?

Other approaches suggested by classmates have included implementation on GPUs (graphics processors used for general purpose computation) and sticking to an SMP implementation (multicore workstations with shared memory--simpler implementation/perhaps not as scalable), versus a distributed-memory cluster. I welcome further ideas that might help kick-start this zany scheme.

Steven Lade What I'm about to say seems kind of obvious, and I'm not sure it helps you at all, but I can't help but say that if your 'averaged behaviour' converges for very large numbers of agents, you'd in effect be modelling some partial differential equation.

Matt McMahon Thanks, Steven. Not obvious to me though ... Can you elucidate?

Steven Lade It seems that as you reach a large number of agents, and your grid becomes small, you'd most likely reach some partial differential equation in the density of agents. Say a diffusion equation. Or a Navier-Stokes (fluid flow) equation. Not sure how easy it would be to derive, but this would be my intuition. It would be easiest for local interactions only (i.e. some radius which you could let approach zero) but non-local interactions might be possible too. It would of course all depend on the agent rules you use. If you're lucky one might even be able to derive some analytical results for special cases. If you want to chat more, find me in person. (Anyone: does this all make sense?)
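For the simplest special case, the intuition can be written out. This is the standard textbook example, not a result for any particular model on this page, and it assumes non-interacting agents that hop left or right by a lattice spacing \delta with equal probability at every time step \tau. With P(x,t) the agent density:

```latex
\begin{align}
P(x, t+\tau) &= \tfrac{1}{2} P(x-\delta, t) + \tfrac{1}{2} P(x+\delta, t) \\
P + \tau \frac{\partial P}{\partial t} + O(\tau^2)
  &= P + \frac{\delta^2}{2} \frac{\partial^2 P}{\partial x^2} + O(\delta^4) \\
\frac{\partial P}{\partial t} &= D \frac{\partial^2 P}{\partial x^2},
  \qquad D = \lim_{\delta, \tau \to 0} \frac{\delta^2}{2\tau}
\end{align}
```

The second line is a Taylor expansion of both sides of the first about (x,t); the odd-order terms in \delta cancel because the walk is unbiased. Interactions between agents, such as flocking or vision-based rules, would add density-dependent terms whose form depends entirely on those rules.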

Resilience to invaders in social systems

A piece of anecdata from my organizing days: when an external organizer came to help on a local campaign, it had one of two very different effects, either further coalescing the local campaign or fragmenting it.

I'm curious how well social structures tolerate interlopers and what drives their resilience.

Possible metaphors/methods which could be useful:

- An agent-based model of the connectivity of the underlying social structure & reaction to interloper?

- Analogizing to food-web/ecology with the interloper as an invasive species?

- Analogizing the interloper to a crystal defect?

BUT I have no idea 1) how to parameterize this and 2) whether there are data (of any sort -- eg resilience to colonists/prophets/carpetbaggers) to which the model could be compared for sanity-checking.

I know nothing about sociology & related fields, so maybe this is a well-studied problem. Or an ill-posed problem. Or maybe it's not a problem at all. In any event, I'd be curious to hear others' thoughts.

Final Projects

Please place your final project ideas here: details should include clear and objective outlines.

The Effect of Gossip on Social Networks

In this project we look at the effects of gossip spread on social network structure. We define gossip as information passed between two individuals A and B about an individual C who is not present, which has the potential to affect the strengths of all three relationships A-B, B-C, and A-C. This work is novel in two respects: first, there is no theoretical work on how network structure changes when information passing through a network has the potential to affect edges not in the direct path, and second while past studies have looked at how network structure affects gossip spread, there is no work done on how gossip spread affects network structure.

Page: Modeling gossip networks

Members:

1,2,3, language!

In a nutshell: In this project we will make use of information theoretic measures of similarity between data sets, such as mutual information or, more specifically, some global alignment methods coming from evolutionary biology, to build up a distance matrix between languages. The data under study are simply the numbers 1,2,3...,10, for which we have access to a massive dataset that enumerates the spelling of the first ten numbers in more than 4,000 languages. We will finally derive the phylogenetic tree of languages, and compare it with the state of the art.
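As an illustration of the distance-matrix step only, here is a toy sketch. The assumptions are mine: plain edit distance (a global alignment with unit costs) normalised by word length stands in for whatever alignment score the project settles on, and the five languages and three numerals are a hand-typed sample, not the project's dataset.

```python
def levenshtein(a, b):
    """Edit distance: global alignment with unit gap and mismatch costs."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # match or substitute
        prev = curr
    return prev[-1]

def language_distance(words_a, words_b):
    """Mean length-normalised edit distance over aligned numeral lists."""
    total = sum(levenshtein(a, b) / max(len(a), len(b))
                for a, b in zip(words_a, words_b))
    return total / len(words_a)

# Hand-typed toy sample: the numerals 1-3 in five languages.
numerals = {
    "English": ["one", "two", "three"],
    "German":  ["eins", "zwei", "drei"],
    "Dutch":   ["een", "twee", "drie"],
    "Spanish": ["uno", "dos", "tres"],
    "Italian": ["uno", "due", "tre"],
}

names = list(numerals)
dist = {a: {b: language_distance(numerals[a], numerals[b]) for b in names}
        for a in names}

for a in names:
    print(a.ljust(8), " ".join(f"{dist[a][b]:.2f}" for b in names))
```

A matrix like `dist` is the usual input to tree-building methods such as UPGMA or neighbour joining; spelling-based distances also depend on orthography, so a phonetic transcription would be a more faithful input.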

Members:

Social mitosis in group conversations: a cooperative phenomenon approach

In a nutshell: When you participate in a conversation, you typically expect to (i) actively participate and (ii) be comfortable in it. These requirements put some constraints on the number of persons attending the same conversation. In other words, when people are forced to stay in the same confined space, they tend to start a conversation; however, if too many people are present, the conversation rapidly splits in two, three... some nucleation phenomenon takes place. In this project we approach this subject from a complex systems point of view and want to understand if the 'conversation mitosis' is a collective phenomenon, much in the vein of a symmetry-breaking phenomenon in statistical physics. We will develop an agent based model that captures the essential mechanisms of conversation dynamics and will characterize such behaviors. Analytical developments will also be addressed. Finally, we will compare our analytical/numerical results with empirical data gathered through e-mail surveys.

Members:

Modeling mesoscopic sequential self-assembly

One of the reasons for the huge success of microelectronics is the ability to produce very large numbers of devices at very low cost. However, a large part of the final price of electronic devices is due to assembly and packaging issues. The standard procedure to package microdevices is by robotic or even manual manipulation, which, while satisfactory for large sizes, becomes inefficient and even practically uncontrollable below the millimeter scale. Moreover, when dealing with very large numbers of components the task becomes time-consuming and thus expensive.

Self-assembly techniques have the potential to boost electronic assembly by their intrinsic massive parallelism and advantageous scaling properties. Particularly, self-assembly performed in liquid environment has gained momentum by showing interesting performance. However, the analytic modeling of the dynamics of this process is still limited and not capable of capturing the details of the stochastic dynamics of self-assembly. In this project, I want to simulate the dynamics of 2D and 3D sequential self-assembly with agent-based models. This framework, never so far applied to this task, may help shed light on the role of important parameters of the process such as dimensions of the assembly space, redundancy of components, and viscosity of the fluid carrier.

Percolation-like phenomenon in the Google search engine

In a nutshell: Type a (short) random string of letters in Google. This mimics the effect of misspelling words, 'typos'. Surprisingly, you will find a non-null number of results: the probability of finding such a word, even if it's a random string without a semantic meaning, is non-null, since (i) someone could have already 'invented it' (acronym or so), (ii) someone could have misspelled a word (committed a typographic error) in his/her website/blog etc. But repeat the procedure with longer strings, and look how the number of results rapidly drops to zero... Is this a phase transition? Can we characterize such a phenomenon? What are the relations between language-like properties and this behavior? What information can we extract? In this project we will address such questions, programming automatic queries to Google of randomly-generated strings and relating the system's behavior to some collective phenomena such as Percolation.

Members:

Competitive spatial network growth

Many large-scale aggregate networks are actually composed of several essentially independent subnetworks, each of which takes into account the other agents' actions. While traditional optimization methods yield insight into the most efficient network structures to satisfy a fixed objective, the presence of several overlapping and evolving networks may change the optimal strategy or create niches for otherwise suboptimal strategies. In this project we develop an agent-based network growth model to simulate competitive airline network growth, studying the effects of the demand distribution, entry time, and number of agents on the success and network structure of the agents.

Interacting distribution networks

Members:

Spectral clustering of gene expression

1. Can we differentiate between genes involved in separate biological functions (i.e., pathways) using spectral clustering?

2. If so, can we use this method to detect the genes activated in cancer?
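As a rough illustration of the first question, the sketch below performs a two-way spectral split of genes based on their expression profiles. It is only a sketch under assumptions: the expression values are made up, the Gaussian similarity and the unnormalized graph Laplacian are one common choice among several, and the Fiedler vector (the eigenvector of the second-smallest Laplacian eigenvalue) is approximated by shifted power iteration so that only the standard library is needed.

```python
import math
import random

def similarity_matrix(profiles, sigma=2.0):
    """Gaussian similarity between expression profiles (zero on the diagonal)."""
    n = len(profiles)
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                d2 = sum((a - b) ** 2 for a, b in zip(profiles[i], profiles[j]))
                w[i][j] = math.exp(-d2 / (2 * sigma ** 2))
    return w

def fiedler_vector(w, iterations=500, seed=0):
    """Approximate the Fiedler vector of L = D - W by power iteration.

    Iterates with (shift*I - L), where shift bounds the largest eigenvalue
    of L, and removes the constant component at every step.
    """
    n = len(w)
    degree = [sum(row) for row in w]
    shift = 2 * max(degree)  # Gershgorin bound on the eigenvalues of L
    rng = random.Random(seed)
    v = [rng.uniform(-1, 1) for _ in range(n)]
    for _ in range(iterations):
        mean = sum(v) / n
        v = [x - mean for x in v]  # project out the constant eigenvector
        v = [(shift - degree[i]) * v[i] + sum(w[i][j] * v[j] for j in range(n))
             for i in range(n)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
    return v

def two_way_split(profiles, sigma=2.0):
    """Assign each gene to cluster 0 or 1 by the sign of its Fiedler component."""
    v = fiedler_vector(similarity_matrix(profiles, sigma))
    return [1 if x > 0 else 0 for x in v]

# Made-up expression profiles: three "low" genes and three "high" genes.
TOY_PROFILES = [
    [1.0, 1.1, 0.9, 1.0], [1.1, 1.0, 1.0, 0.9], [0.9, 1.0, 1.1, 1.1],
    [5.0, 4.9, 5.1, 5.0], [5.1, 5.0, 4.9, 5.1], [4.9, 5.1, 5.0, 5.0],
]

if __name__ == "__main__":
    print(two_way_split(TOY_PROFILES))
```

On real expression data one would more likely use a linear algebra library, a correlation-based similarity, and several eigenvectors followed by k-means to recover more than two groups.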

Members:

Foraging on the move

Many animals (e.g. caribou, wildebeest) forage in groups while moving from one location to another. This means individuals have to simultaneously balance several demands: finding the best resources, maintaining the cohesion of the group, and migrating in a certain direction. While there is a vast literature on both flocking and optimal foraging, there has been no work done to understand how animals should trade off the decision to flock or forage (since it is difficult to do both simultaneously) during migration. We develop an individual-based model to address this, and implement a genetic algorithm to find the best decision rule for switching between foraging and flocking under a variety of conditions.

Page: Foraging on the move

Members:

“Let it rain” - Simulating flood events by Agent-Based Modeling and GIS

How much rain is required to flood the Grand Canyon?

The idea is to build an Agent-Based Model to simulate the impact of increased rainfall on flow dynamics of a specific river network of the Grand Canyon region. The agent for the ABM is the water flow (=runoff) moving from cell to cell, dependent upon topography (=slope/gradients of the neighboring cells). The flow dynamics are therefore directly related to the Digital Elevation Model (DEM) of the region and indirectly to environmental parameters such as soil/substrate (e.g. stratigraphical units) and land cover/use (e.g. bare soil, shrubs, forest, settlement). The latter parameters could be integrated into the ABM by assuming a possible range of values influencing flow dynamics in relation to e.g. infiltration (if the soil is saturated, runoff occurs) and vegetation cover (high vegetation cover leads to high interception, less runoff). The different data layers can be integrated into the ABM by GIS (Geographical Information Systems).

What is the relationship between rainfall pattern and runoff/flooding?

What effects do topographical/environmental parameters (e.g. slope gradients, substrate, vegetation cover) have on runoff/flooding?

Are there non-linearities related to the dynamical flow network?

What are possible feedback mechanisms? (e.g. positive feedback mechanism: increased rainfall → increased runoff → erosion and hence deepening of channels → steeper slope gradients → increased runoff)
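The cell-to-cell routing of runoff described above can be sketched as a steepest-descent rule on a grid of elevations. This is a minimal sketch, not the project's model: the 3x3 elevation grid is invented, the eight-neighbor steepest-descent rule is one common routing assumption, and the single `infiltration` parameter is a hypothetical placeholder for the soil and land-cover effects mentioned in the text.

```python
import math

# Offsets to the eight neighboring cells.
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]

def steepest_neighbor(dem, r, c):
    """Return the neighbor with the steepest downhill slope, or None for a sink."""
    best, best_slope = None, 0.0
    for dr, dc in NEIGHBORS:
        rr, cc = r + dr, c + dc
        if 0 <= rr < len(dem) and 0 <= cc < len(dem[0]):
            # Slope = elevation drop divided by distance (diagonals are longer).
            slope = (dem[r][c] - dem[rr][cc]) / math.hypot(dr, dc)
            if slope > best_slope:
                best, best_slope = (rr, cc), slope
    return best

def flow_accumulation(dem, rain=1.0, infiltration=0.0):
    """Route runoff downhill and return the total water passing through each cell.

    Each cell produces max(0, rain - infiltration) units of runoff and hands
    everything it holds to its steepest downhill neighbor.
    """
    rows, cols = len(dem), len(dem[0])
    runoff = max(0.0, rain - infiltration)
    acc = [[runoff] * cols for _ in range(rows)]
    # Visit cells from highest to lowest so that donors come before receivers.
    cells = sorted(((r, c) for r in range(rows) for c in range(cols)),
                   key=lambda rc: dem[rc[0]][rc[1]], reverse=True)
    for r, c in cells:
        target = steepest_neighbor(dem, r, c)
        if target is not None:
            acc[target[0]][target[1]] += acc[r][c]
    return acc

# Invented toy elevation grid: a valley draining towards the bottom-center cell.
TOY_DEM = [
    [9, 8, 9],
    [8, 5, 8],
    [9, 3, 9],
]

if __name__ == "__main__":
    for row in flow_accumulation(TOY_DEM):
        print(row)
```

Cells with high accumulated flow trace out the channel network. Feedbacks such as erosion would require updating the elevation grid between rainfall events, which this sketch does not do.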

Members:

Update and own page following soon!

Creative Process

The project attempts to model the generation of ideas in the subconscious as a random combination of existing concepts (reflected as words) and their selection (reflected as variance). The selection filter determines the quality and quantity of ideas that rise to the conscious. Although the complete model may not be in place by the end of the week, the presentation will display a basic version of the final (and hopefully publishable) paper.

A Markov Model of Elite Factionalization

Authoritarian regimes fracture when elites within the ruling coalition, which buttresses the dictator, defect. Consequently, regime change crucially depends on elite competition and coordination. Previous work has explored this topic through conventional formal models that make exacting informational and cognitive demands on agents. In contrast, this model will attempt to replicate these findings, while exploring additional dynamics and emergent behavior, by embedding boundedly rational agents in a Markovian system. Rather than assume hyperrational actors capable of solving difficult dynamic programming problems, I assume that elites use relatively simple heuristics to navigate a stochastic environment.

Radicalization Network (RadNet): Spread Mechanisms and Control

The spread of radical ideologies is a key source of terrorist activities. Coordination and management of resources for preemptive control of such activities require network models that enable predictive identification of areas and agents before terrorist action occurs. Our RadNet project develops a layered network model in which the dynamics of radicalization define an evolving radical network layer that exploits an underlying social network layer. We develop quantitative models for the change of state of nodes (representing individuals in the social network) that capture the progress of radicalization through the intermediate stages of general, susceptible, indoctrinated, and radical. We analyze the model and its robustness to control actions by modeling in NetLogo. The models developed and analyzed in RadNet build on insights from the contagion model and introduce a novel modular layered network approach to capture the exploitation of the social network for the spread of ideology, and control networks for monitoring and preemptive action on the evolving radicalization network.
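To make the staged node states concrete, here is a small sketch of a contagion-style update on a social network. Only the four stage names come from the description above. The transition rule (a node advances one stage with probability proportional to the share of its neighbors at a more advanced stage), the `rate` parameter, and the ring network used in the example are assumptions made purely for illustration, and the sketch is in Python rather than NetLogo.

```python
import random

STAGES = ["general", "susceptible", "indoctrinated", "radical"]

def step(graph, stage, rate, rng):
    """Synchronously advance each node by at most one stage.

    Assumed rule: the chance of advancing equals rate times the fraction
    of neighbors that are at a more advanced stage than the node itself.
    """
    new_stage = dict(stage)
    for node, neighbors in graph.items():
        if stage[node] == len(STAGES) - 1 or not neighbors:
            continue  # already radical, or isolated
        ahead = sum(1 for nb in neighbors if stage[nb] > stage[node])
        if rng.random() < rate * ahead / len(neighbors):
            new_stage[node] = stage[node] + 1
    return new_stage

def simulate(graph, seeds, rate=0.5, steps=50, seed=0):
    """Run the update from a set of initially radical seed nodes.

    Returns the list of stage dictionaries, one per time step (including t=0).
    """
    rng = random.Random(seed)
    stage = {node: 0 for node in graph}
    for node in seeds:
        stage[node] = len(STAGES) - 1
    history = [dict(stage)]
    for _ in range(steps):
        stage = step(graph, stage, rate, rng)
        history.append(dict(stage))
    return history

if __name__ == "__main__":
    # Hypothetical social layer: six individuals arranged in a ring.
    ring = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
    final = simulate(ring, seeds=[0])[-1]
    print({node: STAGES[s] for node, s in final.items()})
```

A control layer could be explored in the same framework by, for example, lowering `rate` for monitored nodes, though how control actually enters the RadNet model is not specified here.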

Members:

See Radicalization

Guns, Germs and Steel: A Simulation

We intend to model the spread of early human populations. In his popular work, Guns, Germs, and Steel, Jared Diamond argues that the differential concentration of wealth and people in geographic space is a result of geographic and historical contingencies. Using NetLogo, we will model the dynamics of hunter-gatherer spread and the emergence of early agriculture. Global bioproductivity maps will be used to quantitatively represent resource habitats.

Members:

Spiking Networks on the Cusp of Chaos

The objective of this project is to investigate how small networks of idealized spiking neurons look and behave at the edge of chaos. Specifically, "edge of chaos" here means the boundary area between the region where we observe orderly periodic behavior and the region where we observe chaotic non-periodic behavior. (Both have been observed previously in this dynamical system.)

More details here: Spiking Networks on the Cusp of Chaos

Game theoretic strategies in bike racing

Cooperation is often a paradoxical component of human groups. Seemingly altruistic behavior can often be explained by selfish strategies. The cultivation of cooperation in humans is crucial for solving the world's pressing problems, such as climate change and international disputes. Apparent cooperation often shows up naturally in sports and is often attributed to social norms. Here we intend to set up the basic elements of a bike race in which each individual is motivated to win but can benefit through cooperation with other riders. Each rider will be given a set of skills and a cooperation strategy. The analysis will include competition among different strategies and an understanding of the level of cooperation that emerges from the race environment alone (no social norms). We hope that the qualitative differences between the modeled race and real races might teach us about the presence of social norms even in these competitive environments.

Members:

Study of Sustainability of the Companies, Cities and Societies: Towards the Theory of Social Organizations.

BOEING AS A CASE STUDY

The first phase of this quantitative study will analyze Boeing as a complex adaptive system, with a view to building a broad, fundamental, quantitative, predictive theory of social organizations, drawing on the successful body of work already begun at SFI. A major component is to understand the role of innovation and adaptability in shaping the growth and sustainability of organizations ranging from cities to corporations. Why Boeing? Boeing itself is largely a structure facilitating and integrating the flow of information through a complex organizational network consisting of its many divisions and its multi-tiered, multinational supplier chains. Boeing’s outstanding longevity (it has thrived for nearly 100 years while others failed), outstanding complexity, high propensity for innovation, and the availability of data recommend the company as a case study from which a bridge can later be built towards universal laws of sustainability. The study will attempt to identify the reasons for the system’s longevity, to measure quantitatively its robustness to perturbations, and to define a strategy for its optimization and sustainability as a complex network. It will look at the network’s topology and at the dynamics of information flow across it, studying the impact these dynamics have on technologies and on the speed of innovation within the company. It will also examine the efficiency of the company’s internal communication and, in particular, try to determine how to optimize the flow of information from the bottom up, with the goal of increasing the speed of innovation and economic productivity. The study will attempt to answer specific questions such as: How has the number of internal patents grown with the size of the company? Can dynamic laws be derived from these data, and what are the strategic implications and innovation expectations for a company of a certain size? The study will focus especially on those areas of the system’s landscape where knowledge spillovers occur, and will try to determine in quantifiable terms the rates of technological improvement due to knowledge transfer. Analyses of data on learning curves, design structure matrices, and patents (preferably all of these for several different models) could be very useful in further investigating how technology characteristics (such as modularity) affect rates of technological improvement.

Members:

Leverage in Equities Markets

When people get excited about their prospects on the stock market, they borrow money from the bank to invest. This leverage effectively couples the bank to the stock market. Thus, interest rates determine demand for stock, and demand for stock can determine interest rates. Does this interplay cause traders to naturally find a stable balance of leverage and aggressiveness? How do the behavioral traits of traders influence the stability of these interactions? Are there regulatory behaviors, such as limiting leverage or slowing margin calls, that would contribute to the overall health of the market?

Members:

(provisionally Corinne Teeter)

Deconstructing CSSS09 Social Network

CSSS09 is a group of people interested in complex systems who are randomly chosen to attend the one-month summer school. The international and interdisciplinary group spends four weeks together learning, discussing, and working on projects related to complex systems. We are interested in understanding the following questions:

- What is the social interaction network?

- How does the network change over time?

- What other factors influence the evolution of the network?

- How frequently was there discussion between disciplines and did that lead to productive final projects?

- Is there a link between the social and final product networks?

Status

Stage 1: First survey (profile, social contacts, future collaboration) – after 2nd week

Stage 2: Second survey (language, social activities, social contacts, future collaboration) – end of 3rd week

Stage 3: Network visualisation in NetLogo (prototype) & discussion on approaches for further analysis of data

Stage 4: Survey at the end of 4th week and one month after summer school

Members

Acupuncture points networks

Background and description

Acupuncture is a technique of inserting and manipulating fine filiform needles into specific points on the body to relieve pain or for therapeutic purposes. The word acupuncture comes from the Latin acus, "needle", and pungere, "to prick". In Standard Mandarin, the term is 針砭 (zhēn biān); a related word, 針灸 (zhēn jiǔ), refers to acupuncture together with moxibustion. According to traditional Chinese medical theory, acupuncture points are situated on meridians along which qi, the vital energy, flows. There is no known anatomical or histological basis for the existence of acupuncture points or meridians. Modern acupuncture texts present them as ideas that are useful in clinical practice. According to the NIH consensus statement on acupuncture, these traditional Chinese medical concepts "are difficult to reconcile with contemporary biomedical information but continue to play an important role in the evaluation of patients and the formulation of treatment in acupuncture."

Objective