Complex Systems Summer School 2019-Projects & Working Groups

From Santa Fe Institute Events Wiki

Project and working group ideas go here.

Two ideas from Cat

Both ideas are related to datasets that I can make available. I am committed to publishing results from both, and co-authorship is welcome if you are interested.

The first idea is a natural language processing (NLP) project with spatial aspects. I have gathered all 482 city and 58 county general plans for California, and I have them available both as PDFs and as extracted text. These are 400+ page documents that communities put together to set the course for developing housing, transportation systems, green space, conservation, etc. This dataset is exciting because no state has a database of city/county plans, and these plans govern land use. California offers an interesting case because it has mountains, beaches, rural areas, agricultural areas, desert landscapes, and the coast. Each landscape and population will require unique planning. We could use the dataset to answer a variety of questions. We could ask some simple questions with sentiment analysis (Who wrote the happiest plans? Are rural areas or urban areas the most disparaging in their plans?). We could train a model on the state's recommendations for plans and see which plans fit them (my hypothesis is that plans closest to Sacramento, the state capital, fit best). The takeaway would be that providing 'best practices' for planning is difficult because places and communities differ so much in resources and objectives (e.g., most rural areas do not want population growth, while many urban areas measure success by population growth). We could also take a topical approach. How much housing is each city/county planning to build in housing-stressed California? How do plans talk about fire prevention and management (e.g., in the context of housing, transportation, or forest management)? How are communities planning for greenhouse gas (GHG) reduction (with a focus mainly on air quality, mainly on transportation, or on energy systems)?
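As a starting point for the sentiment and "fit to state guidance" questions, here is a minimal standard-library sketch. It assumes each plan is already available as extracted text in a dict keyed by jurisdiction. The tiny word lists are hypothetical placeholders rather than a validated sentiment lexicon, and TF-IDF cosine similarity is just one simple way to measure how closely a plan's vocabulary tracks a guidance document.

```python
import math
import re
from collections import Counter

# Hypothetical toy lexicon for illustration only; not a validated resource.
POSITIVE = {"vibrant", "thriving", "healthy", "safe", "improve"}
NEGATIVE = {"decline", "blight", "congestion", "hazard", "loss"}


def tokenize(text):
    """Lowercase the text and keep only alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def lexicon_sentiment(text):
    """(positive hits - negative hits) / number of tokens; 0.0 for empty text."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    return (pos - neg) / len(tokens)


def tfidf_vectors(docs):
    """Sparse TF-IDF vectors (dicts) with a smoothed inverse document frequency."""
    counted = [Counter(tokenize(d)) for d in docs]
    n = len(docs)
    df = Counter(term for counts in counted for term in counts)
    idf = {term: math.log((1 + n) / (1 + df[term])) + 1 for term in df}
    return [{t: c * idf[t] for t, c in counts.items()} for counts in counted]


def cosine(u, v):
    """Cosine similarity between two sparse vectors."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    nu = math.sqrt(sum(w * w for w in u.values()))
    nv = math.sqrt(sum(w * w for w in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0


def similarity_to_guidance(guidance, plans):
    """Score each plan (name -> text) by TF-IDF cosine similarity to the guidance text."""
    vectors = tfidf_vectors([guidance] + list(plans.values()))
    reference = vectors[0]
    return {name: cosine(reference, vec) for name, vec in zip(plans, vectors[1:])}
```

At the scale of 540 documents of 400+ pages each, scikit-learn's `TfidfVectorizer` and a validated sentiment lexicon would be more practical; the structure of the comparison stays the same.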

The second project relates to my dissertation and contributes to the science of cities. It would use spatial regression. In coral reef ecosystems, structural complexity begets more habitat niches and greater species diversity, leading to greater overall ecosystem resilience (e.g., faster recovery from disease or disaster). I hypothesize that cities work the same way: more structural complexity (longer urban perimeters in the case of my dataset, though we could use 3D city models as well) would lead to greater land-use diversity and job diversity, which would help protect against economic downturns. None of the data are normally distributed, so the spatial regression is challenging.
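Before fitting a spatial regression, a common first check is whether the variable (or the residuals of a non-spatial model) is spatially autocorrelated. The sketch below computes Moran's I from a spatial weights matrix and attaches a permutation test, which does not rely on normality. The weights matrix, the `chain_weights` helper, and the toy values are hypothetical, for illustration only.

```python
import numpy as np


def morans_i(values, weights):
    """Moran's I for values observed at n locations with an n x n weights matrix."""
    x = np.asarray(values, dtype=float)
    w = np.asarray(weights, dtype=float)
    z = x - x.mean()
    n = x.size
    s0 = w.sum()  # sum of all weights
    return (n / s0) * (z @ w @ z) / (z @ z)


def morans_i_permutation_test(values, weights, n_perm=999, seed=0):
    """One-sided pseudo p-value for positive spatial autocorrelation.

    Shuffling values across locations builds a reference distribution
    without assuming the data are normally distributed.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(values, dtype=float)
    observed = morans_i(x, weights)
    permuted = np.array([morans_i(rng.permutation(x), weights) for _ in range(n_perm)])
    p_value = (np.sum(permuted >= observed) + 1) / (n_perm + 1)
    return observed, p_value


def chain_weights(n):
    """Hypothetical example weights: n locations on a line, neighbours share an edge."""
    w = np.zeros((n, n))
    for i in range(n - 1):
        w[i, i + 1] = w[i + 1, i] = 1.0
    return w
```

For four locations on a line, `morans_i([1, 2, 3, 4], chain_weights(4))` is 1/3 (a smooth gradient, positive autocorrelation) and `morans_i([1, -1, 1, -1], chain_weights(4))` is -1 (perfect alternation). For real city data, PySAL's `esda` package provides Moran's I with the same permutation approach.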

Added by Jessica: One way we could evaluate complexity and information is a measure called ascendency. It is essentially the same information index that Joshua Garland showed us, and it tells us about the diversity of the network. Interestingly, years ago when I plotted this information against productivity/biomass/energy, it showed some Lorenz-like patterns. If we could find a way to model a perturbation in the system, that would make for some interesting predictive analysis.
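Ascendency, as defined by Robert Ulanowicz for ecological flow networks, is the total system throughput multiplied by the average mutual information of the flows. A minimal NumPy sketch, assuming the network is given as a matrix `flows[i, j]` of flow from compartment i to compartment j (imports and exports are ignored here for simplicity; the function names are hypothetical):

```python
import numpy as np


def ascendency(flows, base=2.0):
    """Ascendency A = sum_ij T_ij * log(T_ij * T / (T_i. * T_.j)).

    T is total system throughput (sum of all flows), T_i. is the total
    outflow of i and T_.j is the total inflow of j. Zero flows contribute nothing.
    """
    t = np.asarray(flows, dtype=float)
    total = t.sum()
    out_flow = t.sum(axis=1, keepdims=True)  # T_i.
    in_flow = t.sum(axis=0, keepdims=True)   # T_.j
    ratio = np.ones_like(t)
    np.divide(t * total, out_flow * in_flow, out=ratio, where=t > 0)
    return float(np.sum(t * np.log(ratio)) / np.log(base))


def average_mutual_information(flows, base=2.0):
    """Ascendency divided by total system throughput."""
    return ascendency(flows, base) / float(np.sum(flows))
```

A network whose flows are channelled along dedicated paths (for example `[[1, 0], [0, 1]]`) has 1 bit of average mutual information per unit of throughput, while one where every compartment feeds every other equally (`[[1, 1], [1, 1]]`) has zero.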

Interested Participants

- Jessica Brumley

- Dee Romo

Dangerous idea about reviewing

Dan and I came up with this really dangerous idea to break academia over lunch. Reviewer #2 is an AI: we could use existing publications (e.g., PLOS ONE) to train a model. Any paper uploaded for review would be reviewed by AI Reviewer #2. The review would take minutes and would most likely end in rejection or acceptance with modifications. The AI could tell you where your paper fits in the broader scholarship on the topic. Does your paper bring together unique disciplines or ideas, or test new hypotheses? How many papers have already been published on this topic, and how do your findings compare in sample size, methodology, and spatial and temporal context? In essence: have you found an anomaly, or more evidence to support a general theory? Where publicly available data exist, the AI could repeat analyses to verify findings. The AI could easily tell you where you have failed to cite important works, or where you have been biased in citing the later work of a man over the foundational work of a woman or person of color (e.g., everyone cites Robert Putnam for social capital and not Jane Jacobs). Such a reviewer could also provide sentiment analyses by discipline (e.g., economics still loves Garrett Hardin's Tragedy of the Commons over Elinor Ostrom's work on the commons, while all other disciplines are ready to kill Hardin's work). The second phase would use predictive modeling: Reviewer #2 would write papers and predict new theories. This work would start with literature reviews (as any good PhD student would) and then move into analyzing public datasets to answer new questions. We could check in after 10 years of human publication time had elapsed (e.g., about 5-10 papers), or 50 years, and see where science went. We could toggle the inputs (more hard sciences or more social sciences) to see how this changed the output and trajectory of science.
The real-world application could mean that we could do science with very little funding, and we would all be out of a job.
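One concrete piece of Reviewer #2, flagging possibly missing citations, could start from plain co-citation counts: works that are frequently cited alongside a manuscript's references, but not by the manuscript itself, are candidates. This is a minimal sketch under that assumption; the function names and citation keys are hypothetical, and a real system would need a large citation corpus and some normalization for popularity.

```python
from collections import Counter
from itertools import combinations


def cocitation_counts(reference_lists):
    """Count how often each pair of works is cited together in the corpus."""
    counts = Counter()
    for refs in reference_lists:
        for a, b in combinations(sorted(set(refs)), 2):
            counts[(a, b)] += 1
    return counts


def suggest_missing_citations(manuscript_refs, corpus_reference_lists, top_k=5):
    """Rank uncited works by how often they are co-cited with the manuscript's references."""
    cited = set(manuscript_refs)
    scores = Counter()
    for (a, b), count in cocitation_counts(corpus_reference_lists).items():
        if a in cited and b not in cited:
            scores[b] += count
        elif b in cited and a not in cited:
            scores[a] += count
    return [work for work, _ in scores.most_common(top_k)]
```

With a toy corpus in which `jacobs1961` appears alongside `putnam2000` in two reference lists, a manuscript citing only `putnam2000` gets `jacobs1961` as its top suggestion.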

Emergence of cooperative strategies by means of game warping, using network science

(From Shruti)

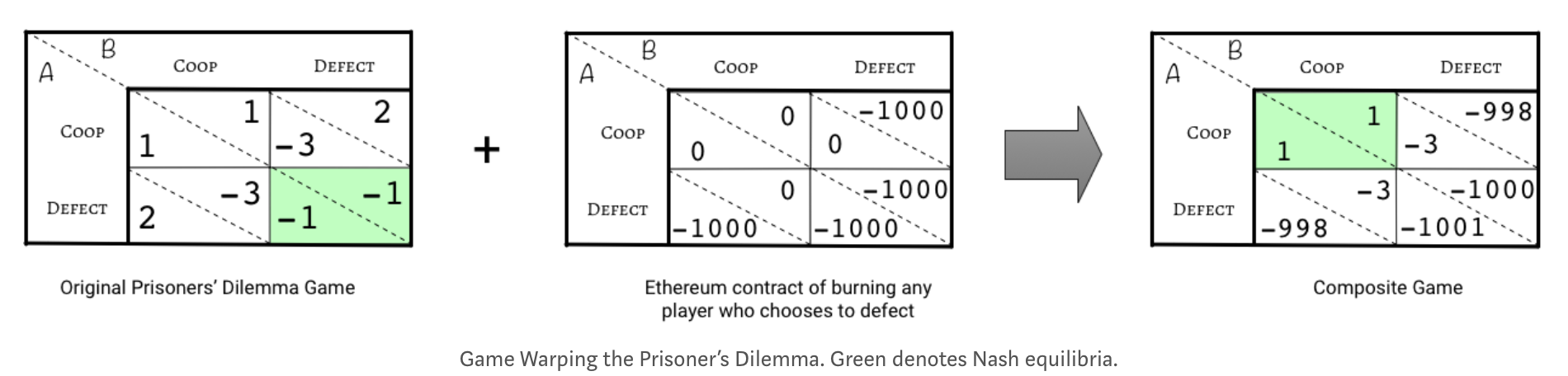

What if players could transform a noncooperative game into a cooperative, positive-sum game? This is possible in certain digital economic systems (such as those on a blockchain) because all contracts are strictly enforceable. These types of "game-warping" transformations are interesting because, given any economic model with predefined rules, the agents are able to develop unforeseeable cooperation strategies, form coalitions, and expand the scope of potential actions over time. Effectively, players are collectively able to overturn the system dynamics. The economy evolves because the economic rules effectively change with time (anyone play Baba Is You?). "Game warping" is defined as using transparent, triggerable, unstoppable punishments to move game-theoretic equilibria. We can extend this to multiple players and model the system as a graph/network to explore which cooperation strategies emerge. I believe that studying these systems at a macro level, using simulations or networks, will bring the greatest degree of insight and set this research apart. David Wolpert's (SFI) work on "game mining" is also relevant (https://www.santafe.edu/news-center/news/wolpert-aaec-game-mining).

Citation: https://medium.com/@virgilgr/ethereum-is-game-changing-technology-literally-d67e01a01cf8
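To make the definition of game warping concrete, here is a two-player sketch. It assumes a standard prisoner's dilemma with illustrative payoffs and models the enforceable punishment as a fixed penalty subtracted from any player who defects; `warp` and `pure_nash_equilibria` are hypothetical names. A brute-force search over pure strategies shows the equilibrium moving from mutual defection to mutual cooperation.

```python
from itertools import product

STRATEGIES = ("C", "D")

# Illustrative prisoner's dilemma payoffs: (row player, column player).
BASE = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def warp(payoffs, penalty):
    """Subtract an enforceable penalty from any player who defects."""
    return {
        (a, b): (pa - penalty * (a == "D"), pb - penalty * (b == "D"))
        for (a, b), (pa, pb) in payoffs.items()
    }


def pure_nash_equilibria(payoffs):
    """Strategy profiles where neither player gains by deviating unilaterally."""
    equilibria = []
    for a, b in product(STRATEGIES, repeat=2):
        pa, pb = payoffs[(a, b)]
        row_ok = all(payoffs[(alt, b)][0] <= pa for alt in STRATEGIES)
        col_ok = all(payoffs[(a, alt)][1] <= pb for alt in STRATEGIES)
        if row_ok and col_ok:
            equilibria.append((a, b))
    return equilibria
```

With these payoffs, any penalty larger than 2 (the gap between the temptation payoff 5 and the reward 3) makes cooperation strictly dominant. A multi-player, networked version would apply the same check to each player against its neighbours.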


Simulating evolution of bacterial cells’ decision to divide

(From Kunaal)

How do cells decide when it is the right time to divide? From a purely efficiency-based perspective, cells can take up nutrients at a rate proportional to their surface area, but their nutrient requirement for growth scales with their volume. Because volume grows faster than surface area as a cell gets bigger, there should be a cell size that is optimal for division.

The problem with this reasoning is that it predicts cells will divide at the same size on average, irrespective of their initial size. But we know that in most bacterial species, cells that start out small (large) tend to divide at a size smaller (larger) than the average size at division.

This indicates there is a different reason behind cells’ decision to divide: an optimal path chosen by evolution. I intend to simulate cells that are subject to mutation under different conditions to understand how this division mechanism arises through evolution and why it is optimal.
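One common way to formalize the contrast between size-based division and the observed pattern is to compare a "sizer" rule (divide on reaching a fixed size) with an "adder" rule (divide after adding a fixed amount of size since birth), which is widely discussed for bacteria. The sketch below is a hypothetical, mutation-free starting point: it simulates a single lineage under each rule with symmetric division and Gaussian noise, then regresses division size on birth size. A sizer gives a slope near 0, while an adder gives a slope near 1, matching the pattern where small-born cells divide smaller. An evolutionary version could let each rule's parameters mutate and compete.

```python
import random


def division_size(birth_size, rule, target=2.0, added=1.0):
    """Size at which a cell divides under a hypothetical control rule."""
    if rule == "sizer":
        return target  # same division size regardless of birth size
    if rule == "adder":
        return birth_size + added  # fixed increment since birth
    raise ValueError(f"unknown rule: {rule}")


def simulate_lineage(rule, generations=2000, noise_sd=0.1, seed=0):
    """Follow one daughter per division; returns (birth, division) size pairs."""
    rng = random.Random(seed)
    birth = 1.0
    records = []
    for _ in range(generations):
        divide_at = division_size(birth, rule) + rng.gauss(0.0, noise_sd)
        records.append((birth, divide_at))
        birth = divide_at / 2.0  # symmetric division
    return records


def ols_slope(pairs):
    """Ordinary least-squares slope of division size on birth size."""
    xs, ys = zip(*pairs)
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in pairs)
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx
```

Comparing `ols_slope(simulate_lineage("sizer"))` with `ols_slope(simulate_lineage("adder"))` reproduces the qualitative difference; the noise level and sizes are arbitrary choices.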

Join #cell-division-sim on Slack if you are interested.

Modelling the spatial diffusion of human languages

The diversification of human languages is a bit like speciation in biology: using comparative and cladistic methods, linguists can group languages into language families and further subgroup them into "phylogenetic" trees or networks. At the same time, we know where these languages are spoken today. The question, then, is: putting these two sources of data together, can we model the diffusion of languages over physical space and work backwards from the present day to infer the most likely homelands of the corresponding protolanguages? Can the predictions of such a model be made to align with what we otherwise know about past human migrations? And, most importantly (I think), from a complex systems perspective: which facets of the processes of linguistic diffusion and diversification are universal (i.e., not due to accidental historical events)? We could start with a simple random-walk model and take it from there. The Slack channel is #language-diffusion.
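A minimal version of the random-walk starting point, assuming every language lineage takes an independent Gaussian step each generation and occasionally splits into two daughter languages at the same location. The centroid of present-day positions is a deliberately naive homeland estimator; a fuller model would use the phylogenetic tree to reconstruct ancestral locations node by node. All function names and parameter values here are hypothetical.

```python
import random


def branching_random_walk(generations, split_prob=0.1, step_sd=1.0,
                          homeland=(0.0, 0.0), seed=0):
    """Positions of all surviving lineages after a branching 2D Gaussian random walk."""
    rng = random.Random(seed)
    lineages = [homeland]
    for _ in range(generations):
        next_gen = []
        for x, y in lineages:
            x += rng.gauss(0.0, step_sd)
            y += rng.gauss(0.0, step_sd)
            next_gen.append((x, y))
            if rng.random() < split_prob:
                next_gen.append((x, y))  # daughter language starts at the same place
        lineages = next_gen
    return lineages


def centroid(points):
    """Naive homeland estimate: the mean of present-day positions."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)
```

Running many simulations from a known homeland and measuring how far `centroid(...)` lands from it gives a baseline error against which smarter, tree-aware estimators could be compared.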

First meeting: Friday 1pm, lecture room

Data

Papers to read

- Let's add them here

Interested participants

- Henri

- Dee Romo

- Kenzie Givens

- Ritu

- Harun

- Let's add ourselves here

Future plans

This is (or can be, if we want) a somewhat ambitious project. I'd be happy to continue working towards a publication after CSSS.

Butterflies in Water: Optimal Perturbations for Mixing in Treatment Processes

This idea came from Liz Bradley’s last lecture, in which she showed us the 2D “hurricanes in a box” experiment and added the “butterflies”.

Water treatment processes often need perturbations to mix the water, especially if you need to oxidize and precipitate out a contaminant (iron is a common example). Ultimately, you want to do this in the most energy-efficient way. The goal when building these systems is to expose as much of the water as possible to the air at the surface and to mix in atmospheric oxygen for as long as possible. There are various ways to do this: build ponds with large surface areas; build a “stream-like” pond so the water flows for longer; add small dams for the water to go around; add poles/sticks to the water (some people have tried this); etc. It is not yet understood which method is most successful, or what level of perturbation is optimal for mixing. Could agent-based modeling help? Do the mixing and oxidation processes exhibit chaotic behavior?

I am also seriously thinking about engineering a laboratory model to test this.
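Whether mixing behaves chaotically can be explored numerically before building anything. A standard toy model of chaotic advection is the alternating sine flow on a unit square with periodic boundaries: the fluid is sheared horizontally, then vertically, with a chosen amplitude. The sketch below is an idealized stand-in, not a model of any real pond or channel, and its function names are hypothetical. It releases a small "dye" blob and measures mixing as the Shannon entropy of tracer counts over a grid; sweeping the amplitude gives a crude analogue of asking how much perturbation is enough.

```python
import math
import random


def sine_flow_step(points, amplitude):
    """One period of the alternating sine flow on the unit torus."""
    moved = []
    for x, y in points:
        x = (x + amplitude * math.sin(2 * math.pi * y)) % 1.0  # horizontal shear
        y = (y + amplitude * math.sin(2 * math.pi * x)) % 1.0  # vertical shear
        moved.append((x, y))
    return moved


def mixing_entropy(points, bins=10):
    """Shannon entropy (bits) of tracer counts on a bins x bins grid; higher means better mixed."""
    counts = {}
    for x, y in points:
        cell = (min(int(x * bins), bins - 1), min(int(y * bins), bins - 1))
        counts[cell] = counts.get(cell, 0) + 1
    n = len(points)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def mixing_after(amplitude, steps=10, n_points=2000, seed=0):
    """Release a dye blob inside one grid cell, stir it, and report the final entropy."""
    rng = random.Random(seed)
    points = [(rng.uniform(0.01, 0.09), rng.uniform(0.01, 0.09)) for _ in range(n_points)]
    for _ in range(steps):
        points = sine_flow_step(points, amplitude)
    return mixing_entropy(points)
```

Sweeping `mixing_after(a)` over a range of amplitudes, and over `steps` as a rough proxy for energy spent, is one way to ask where extra stirring stops paying off; the maximum possible entropy on the default 10 x 10 grid is log2(100), about 6.64 bits.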

Interested Participants

Jessica Brumley

Computational Social Science in Decision-Making: an Opioid Epidemic Case-Study

Project write-up from Slack: As part of my (Kyle Furlong’s) work, I’ve been developing a tool/application that uses computational social science and agent-based modeling to help decision-makers make better data-driven decisions. I’m using the opioid epidemic as a “case study” for this tool. Built with NetLogo and R (RShiny), the tool allows the user to explore multiple social science theories of addiction and perform what-if analyses to determine which public policies/programs might be most effective in reducing negative outcomes (overdoses, deaths, etc.).

I’ve got an early prototype UI/code (pictured below) running and have built in some basic theories of addiction pulled from the literature, but I’d love to collaborate with anyone interested in the topic (addiction, drug use, public health), the methods (NetLogo/ABMs, social networks), and/or the approach. I’m open to informal coffee (or not-coffee) drinking groups that crowd-source at a conceptual level, or to more technical groups working to improve my admittedly unrefined addiction models.
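For readers new to agent-based what-if analysis, the basic loop looks like the toy model below. It is not the NetLogo tool described above: the three states, the transition probabilities, and the treatment "policy" lever are all hypothetical placeholders, chosen only to show how a baseline and a policy scenario are compared with a fixed seed for reproducibility.

```python
import random


def simulate(n_agents=1000, steps=52, p_start=0.01, p_overdose=0.02,
             p_treatment=0.0, seed=0):
    """Count overdoses in a toy population; every parameter is a hypothetical placeholder.

    States: "none" (not using), "using", "treatment" (absorbing, for simplicity).
    """
    rng = random.Random(seed)
    states = ["none"] * n_agents
    overdoses = 0
    for _ in range(steps):  # e.g. weekly time steps
        for i, state in enumerate(states):
            if state == "none":
                if rng.random() < p_start:
                    states[i] = "using"
            elif state == "using":
                if rng.random() < p_treatment:  # the policy lever
                    states[i] = "treatment"
                elif rng.random() < p_overdose:
                    overdoses += 1
    return overdoses
```

Comparing `simulate()` with `simulate(p_treatment=0.05)`, averaged over many seeds, is the Python analogue of a parameter sweep; in NetLogo, BehaviorSpace serves that role.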

Communication Channels

Slack Channel: #compsocialsci-opioids

Meeting Schedule & Notes

TBA

Interested Participants

Shamelessly pulled from the whiteboard after the project brainstorming session on 6/13/2019:

- John Malloy

- Winnie Poel

- Robert Coulter

- Fabian Dablander

- Dakota Murray

- Xin Ran

- Dee Romo

- Pablo Franco

- David Gier

Science Policy & Communication

How is information transferred from scientists to policymakers to constituents? How much information is lost in translation from scientific papers to news articles and tweets? This group will explore the (potential) information loss along each transition, along with other policy-based issues that will emerge from the interaction between scientists and policymakers.

Direct questions to John Malloy (Slack preferred)
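One crude, quantifiable proxy for "information lost in translation" is how far the vocabulary of a tweet or news article drifts from the paper it summarizes. The sketch below uses the Jensen-Shannon divergence between word-frequency distributions (0 for identical distributions, 1 bit for completely disjoint vocabularies). It is only an assumption-laden starting point with hypothetical function names: it captures changes in wording, not whether the meaning survived.

```python
import math
import re
from collections import Counter


def word_distribution(text):
    """Relative word frequencies of a text (lowercased, letters and apostrophes only)."""
    counts = Counter(re.findall(r"[a-z']+", text.lower()))
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def js_divergence(p, q):
    """Jensen-Shannon divergence in bits between two word distributions (0 to 1)."""
    vocab = set(p) | set(q)
    m = {w: 0.5 * (p.get(w, 0.0) + q.get(w, 0.0)) for w in vocab}

    def kl_to_m(dist):
        return sum(pw * math.log2(pw / m[w]) for w, pw in dist.items() if pw > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)
```

Applied along a chain (abstract, press release, news article, tweet), the divergence from the original at each step would give one rough measure of drift per transition.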

Communication Channels

Slack channel: science-policy

Interested Participants (taken from Slack)

- Andrew GB

- Chris Boyce-Jacino

- Dakota Murray

- David Gier

- Jackie Brown

- Mackenzie Johnson

- Elissa Cohen

- Jessica Brumley

- Majorie

- Mikaela Akrenius

- Aabir

- Kyle Furlong

- Patrick Steinmann

- Ritu

Modeling and predicting food insecurity using a resilience lens

or Can complex systems help feed the hungry?

Slack channel: food-security

Over 800 million people are hungry today, and they are vulnerable to drought, floods, and crop disease driven by climate change. I’m interested in modeling the incidence of hunger as a dynamic, stochastic system using a resilience lens. I would like to see whether we can predict the incidence of hunger in response to shocks using a neural net. I have some data to play with and am open to exploring different models and predictive algorithms. If we get some promising results, we can showcase them to policymakers at USAID and the World Bank, who are very interested in this space.
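One way to make the resilience lens concrete, following a common framing in which resilience is the probability of staying above a well-being threshold despite shocks, is a stochastic simulation like the one below. Everything here is a hypothetical placeholder: a household-level food-security index that reverts toward a mean, random shocks of fixed size (standing in for drought, floods, or crop disease), and a hunger threshold. A predictive model such as a neural net would then be trained to estimate this kind of probability from observed data rather than simulate it.

```python
import random


def prob_food_insecure(x0=1.0, mean=1.0, persistence=0.8, shock_prob=0.1,
                       shock_size=0.5, noise_sd=0.05, threshold=0.6,
                       steps=20, n_households=1000, seed=0):
    """Share of simulated households whose index ends below the hunger threshold."""
    rng = random.Random(seed)
    below = 0
    for _ in range(n_households):
        x = x0
        for _ in range(steps):
            shock = shock_size if rng.random() < shock_prob else 0.0
            # Mean-reverting (AR(1)) recovery, small noise, and occasional shocks.
            x = mean + persistence * (x - mean) + rng.gauss(0.0, noise_sd) - shock
        below += x < threshold
    return below / n_households
```

Comparing the result for, say, `shock_prob=0.1` against `shock_prob=0.3`, or for lower `persistence` (faster recovery), shows how shock frequency and recovery speed trade off in this toy setting.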

Participants

- Erwin Knippenberg

- Travis Moore

- Ludvig Holmér

- Andrew Gillreath-Brown

- Alexander Bakus

- Pam Mantri

- Dan Krofcheck

Modeling Minecraft's Crafting Web

Map the web of natural resource use in Minecraft and its hierarchy of dependencies, including potentially circular dependencies (e.g., you need spider silk to make a bow, which you can then use to kill spiders). We can then infer which resources are used most, their trophic levels, and what tools are required to produce them.
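The "trophic level" idea can be borrowed directly from food-web ecology, where an item's level is one plus the average level of what it is made from, and raw resources sit at level 1. Writing this as a linear system handles circular dependencies such as the bow/spider example, as long as every item eventually traces back to a raw resource. The recipe table below is a hypothetical, heavily simplified fragment, not the game's actual data.

```python
import numpy as np

# Hypothetical, simplified recipe fragment: item -> list of direct inputs.
RECIPES = {
    "log": [],                         # raw resource
    "planks": ["log"],
    "stick": ["planks"],
    "bow": ["stick", "spider_silk"],
    "spider_silk": ["bow"],            # obtained by killing spiders with a bow
}


def trophic_levels(recipes):
    """Level = 1 + average level of inputs; raw resources get level 1."""
    items = list(recipes)
    index = {item: i for i, item in enumerate(items)}
    n = len(items)
    diet = np.zeros((n, n))
    for item, inputs in recipes.items():
        for source in inputs:
            diet[index[item], index[source]] += 1.0 / len(inputs)
    # s = 1 + diet @ s  <=>  (I - diet) s = 1
    levels = np.linalg.solve(np.eye(n) - diet, np.ones(n))
    return {item: float(level) for item, level in zip(items, levels)}
```

Here `log` comes out at level 1, `planks` at 2, `stick` at 3, `bow` at 6 and `spider_silk` at 7: the loop pushes both items up. A cycle with no path back to a raw resource makes the system singular, and `np.linalg.solve` raises `LinAlgError`, which is itself a useful signal of a closed loop.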

Participants

- Kate Wootton

- Alexander Bakus

- Chris Quarles

- Patrick Steinmann

- Erwin Knippenberg

Looking for resilient patterns in Conway's Game of Life

Resilience to environmental challenges is one of the hallmarks of life. The goal of this project would be to search for patterns in Conway's Game of Life that can cope with external perturbations and self-organize back into their original forms. Conway's Game of Life [1] is a cellular automaton that has attracted a lot of attention due to the life-like forms it generates. Cellular automata are computational models composed of a grid of cells, each of which can be in one of two (or more) states. At every generation, each cell can change state according to the states of its neighbours. Interestingly, Conway's Game of Life is Turing-complete, meaning that it can compute any computable function, including the Game of Life itself [2]. For this reason, we might expect to find a wide range of interesting patterns, including some that can detect external perturbations and repair themselves. By finding them, we would provide a compelling example of one of life's key traits as an emergent behaviour in a simple computational environment.

[1] https://www.youtube.com/watch?v=ouipbDkwHWA
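A minimal NumPy sketch of the search loop: one Game of Life update on a toroidal grid, plus a test of whether a pattern returns to its exact original configuration within a few generations after some cells are flipped. It compares against the original grid only, so it suits still lifes; oscillators or gliders would need comparison against every phase or translation. The function names are hypothetical.

```python
import numpy as np


def life_step(grid):
    """One Game of Life generation (birth on 3, survival on 2 or 3) on a toroidal grid."""
    g = np.asarray(grid, dtype=np.uint8)
    neighbours = sum(
        np.roll(np.roll(g, dr, axis=0), dc, axis=1)
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    born = (g == 0) & (neighbours == 3)
    survives = (g == 1) & ((neighbours == 2) | (neighbours == 3))
    return (born | survives).astype(np.uint8)


def recovers(pattern, flips, max_steps=10):
    """Flip the given (row, col) cells, then check whether the exact original grid reappears."""
    original = np.asarray(pattern, dtype=np.uint8)
    grid = original.copy()
    for r, c in flips:
        grid[r, c] ^= 1
    for _ in range(max_steps):
        grid = life_step(grid)
        if np.array_equal(grid, original):
            return True
    return False
```

For example, a 2x2 block with one cell removed grows back in a single generation, while removing two diagonal cells kills it. A search would run `recovers` over many candidate patterns and perturbations and keep those that survive most of them.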

Participants

- Alexander Schaefer

- Dan Krofcheck

- Kazuya Horibe

- Arta Cika

- Elissa Cohen

- Luther Seet

- Patrick Steinmann

- Germán Kruszewski

Analyzing Collaboration Throughout CSSS History

How has the nature of collaboration at CSSS changed over time? Using project and participant data from the last 20 years of the program, we plan to explore how topics and group structures have changed over time. Have groups become more interdisciplinary? Is there a pattern to the types of projects that individuals from particular fields tend to work on?
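One simple way to operationalize "more interdisciplinary" is the Shannon entropy of each group's disciplinary mix, averaged by year. The sketch assumes the historical data can be reduced to (year, list of member disciplines) pairs; the function names are hypothetical and the records in the example are made up for illustration.

```python
import math
from collections import Counter, defaultdict


def shannon_entropy(labels):
    """Entropy (bits) of a list of category labels; 0 for a single-discipline group."""
    counts = Counter(labels)
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def mean_group_diversity_by_year(groups):
    """groups: iterable of (year, [discipline of each member]) pairs."""
    by_year = defaultdict(list)
    for year, disciplines in groups:
        by_year[year].append(shannon_entropy(disciplines))
    return {year: sum(v) / len(v) for year, v in sorted(by_year.items())}
```

For example, a year with one all-physics pair (0 bits) and one biology/physics pair (1 bit) averages 0.5 bits, while a four-person group with four different disciplines scores 2 bits. A rising trend across years would be one signal of growing interdisciplinarity.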

Participants

- Dakota

- Emily

- Fabian

- Jackie

- Kyle