Georg M Goerg

From Santa Fe Institute Events Wiki

My path to SFI

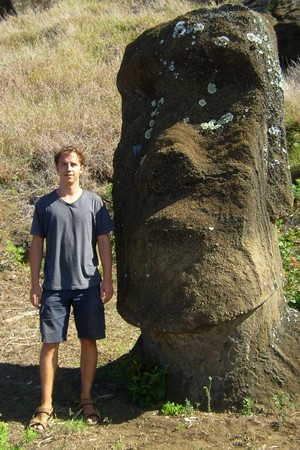

I am a PhD candidate (starting 4th year) in Statistics at Carnegie Mellon. I received my master's degree in mathematics (applied / econometrics / time series) from the Vienna University of Technology, Austria. Before coming to the US, I spent a year in Chile teaching statistics (mainly time series) at PUC. For more details you can visit my website. You can email me at "my_3_initials_in_lowercase"@stat.cmu.edu.

I am very eager to participate in the CSSS; especially because of the inter-disciplinary research / collaborations on real world problems with people from many backgrounds - that's what statistics is all about (at least for me). So I am looking forward to meeting all of you and I am sure we'll have a great month ahead of us.

Research Interests

In my thesis I work on local statistical complexity (LSC) - a measure of interestingness for spatio-temporal fields. We develop the statistical methods and algorithms to i) forecast a spatio-temporal system, and ii) discover patterns automatically solely from the data. We do this using modern non-parametric statistical / machine learning techniques with good properties for any kind of (complex) spatio-temporal system.

One reason why I work on spatio-temporal systems is that I have always been drawn to time series (a la "My interest lies in the future because I am going to spend the rest of my life there." - Charles F. Kettering) and to methods that try to solve real-world problems. These include time series clustering, forecasting, blind source separation techniques for forecastable time series, and time-varying parameter models. Another side project is skewed and heavy-tailed distributions, in particular how we can transform random variables to introduce skewness and heavy tails. As a statistician, what's even more relevant to me is how I can reverse this transformation, so that I can take data and remove skewness, remove power laws, and remove heavy tails.

I do all my statistical computing in R -- for user-friendly code and R packages (two so far), and Python -- for huge data tasks.

In my spare time I like to play soccer and volleyball, dance salsa, travel, ...

SFI Project

Math / Statistics

Conceptual view

Parke [3] proposes an error duration model (EDM) as the driving force for long memory processes and thereby obtains an elegant and very insightful representation and justification for the - non-spurious - occurrence of long memory processes: The basic mechanism for an error duration model is a sequence of shocks of stochastic magnitude and stochastic duration. The variable observed in a given period is the sum of those shocks that survive to that point.
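To make the mechanism concrete, here is a minimal simulation sketch. It is not Parke's code. It assumes one new shock per period, Gaussian magnitudes, and a user-supplied duration distribution; the names `simulate_edm` and `geometric_duration` are hypothetical and used only for illustration.

```python
import random


def simulate_edm(n, draw_duration, burn_in=500, seed=0):
    """Simulate n observations of a simple error duration model.

    Every period one new shock arrives with a random magnitude and a
    random lifetime. The observation at time t is the sum of all shocks
    that are still alive at t.
    """
    rng = random.Random(seed)
    total = n + burn_in
    # change[t] = magnitudes entering at t minus magnitudes expiring at t
    change = [0.0] * (total + 1)
    for s in range(total):
        magnitude = rng.gauss(0.0, 1.0)   # assumption: N(0, 1) magnitudes
        duration = draw_duration(rng)     # periods alive, must be >= 1
        change[s] += magnitude
        change[min(s + duration, total)] -= magnitude
    series, level = [], 0.0
    for t in range(total):
        level += change[t]                # running sum of surviving shocks
        series.append(level)
    return series[burn_in:]               # drop the start-up transient


def geometric_duration(rng, stay=0.8):
    """Assumed example lifetime: survive each extra period with prob. stay."""
    duration = 1
    while rng.random() < stay:
        duration += 1
    return duration


series = simulate_edm(1000, geometric_duration)
```

Geometric lifetimes give short memory; a heavy-tailed lifetime distribution can be passed as `draw_duration` instead, in which case a fixed burn-in is only a rough approximation of the stationary start.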

The point of this process is that the distribution of the (unobserved) survival times determines the correlation structure of the observed series. Conversely, we should be able to infer the lifetime distribution of the shocks from the correlation structure. This matters because in practice we observe neither the individual shocks nor their lifetimes, but we can estimate the correlations of the observations. Thus in principle it should be possible to estimate the lifetime distribution from the counts alone.
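The inversion can be sketched under explicit assumptions: shocks are i.i.d. with mean zero and variance sigma^2, independent of their lifetimes, and p_k denotes the probability that a shock survives at least k periods (so p_0 = 1). A shock alive at time t + k was also alive at t, so the autocovariance at lag k is gamma_k = sigma^2 * (p_k + p_{k+1} + ...). Differencing gives p_k = (gamma_k - gamma_{k+1}) / (gamma_0 - gamma_1). The function names below are hypothetical, and this is only an illustration of the idea, not a worked-out estimator.

```python
def autocovariances(survival, shock_var=1.0):
    """Theoretical gamma_k = shock_var * sum_{j >= k} p_j (finite lifetimes)."""
    gammas, tail = [], 0.0
    for p in reversed(survival):
        tail += p
        gammas.append(shock_var * tail)
    gammas.reverse()
    gammas.append(0.0)  # no shock outlives the longest lifetime
    return gammas


def survival_from_autocov(gammas):
    """Invert the relation: p_k = (gamma_k - gamma_{k+1}) / (gamma_0 - gamma_1)."""
    scale = gammas[0] - gammas[1]  # equals shock_var because p_0 = 1
    return [(gammas[k] - gammas[k + 1]) / scale
            for k in range(len(gammas) - 1)]


def sample_autocov(series, max_lag):
    """Plain sample autocovariances, to plug in when only data are observed."""
    n = len(series)
    mean = sum(series) / n
    return [sum((series[t] - mean) * (series[t + k] - mean)
                for t in range(n - k)) / n
            for k in range(max_lag + 1)]


survival = [1.0, 0.5, 0.25, 0.125]
recovered = survival_from_autocov(autocovariances(survival, shock_var=2.0))
```

With estimated autocovariances from `sample_autocov` the differences are noisy and need not be non-negative or decreasing, so a practical estimator would have to add smoothing or shape constraints.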